HiPACC Data Science Press Room Archive

The Data Science Press Room highlights computational and data science news in all fields *outside of astronomy* in the UC campuses and DOE laboratories comprising the UC-HiPACC consortium. The wording of the short summaries on this page is based on wording in the individual releases or on the summaries on the press release page of the original source. Images are also from the original sources except as stated. Press releases below appear in reverse chronological order (most recent first); they can also be displayed by UC campus or DOE lab by clicking on the desired venue at the bottom of the left-hand column.

This page is the archive.

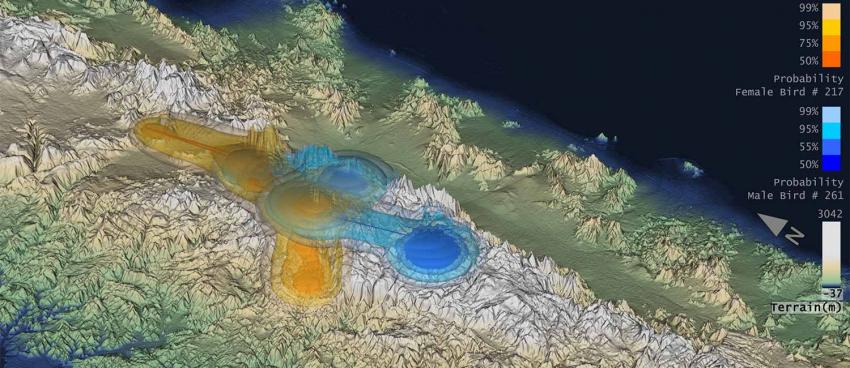

October 9, 2014 — Counting crows—and more

Male Scarlet Tanager (Piranga olivacea), a vibrant songster of eastern hardwood forests. These long-distance migrants move all the way to South America for the winter. Credit: Kelly Colgan Azar

UCSB 10/9/2014—As with the proverbial canary in a coal mine, birds are often a strong indicator of environmental health. Over the past 40 years, many species have experienced their own environmental crisis due to habitat loss and climate change. Fully understanding bird distribution relative to the environment requires extensive data beyond those amassed by any single institution. Enter DataONE: the Data Observation Network for Earth, a collaboration of distributed organizations with data centers and science networks, including the Knowledge Network for Biocomplexity (KNB) administered by UC Santa Barbara’s National Center for Ecological Analysis and Synthesis (NCEAS). Funded in 2009 as one of the initial NSF DataNet projects, DataONE has enhanced the efficiency of synthetic research—research that synthesizes data from many sources—enabling scientists, policymakers and others to more easily address complex questions about the environment. In its second phase, DataONE will target goals that enable scientific innovation and discovery while massively increasing the scope, interoperability and accessibility of data.

View UCSB Data Science Press Release

October 9, 2014 — UCLA receives $11 million grant to lead NIH Center of Excellence for Big Data Computing

Peipei Ping, principal investigator for UCLA’s new Center of Excellence for Big Data Computing. Credit: UCLA

UCLA 10/9/2014—The National Institutes of Health (NIH) has awarded UCLA $11 million to form a Center of Excellence for Big Data Computing. The Center will develop new strategies for mining and understanding the mind-boggling surge in complex biomedical data sets. The grant to UCLA was part of an initial $32 million outlay for the NIH’s $656 million Big Data to Knowledge (BD2K) initiative. As one of 11 centers nationwide, UCLA will create analytic tools to address the daunting challenges facing researchers in accessing, standardizing and sharing scientific data to foster new discoveries in medicine. Investigators also will train the next generation of experts and develop data science approaches for use by scientists. A key focus for the UCLA center will be creating and testing cloud-based tools for integrating and analyzing data about protein markers linked to cardiovascular disease. The center’s findings will help shape guidelines for future data integration and analysis, and the management of data from electronic health records.

View UCLA Data Science Press Release

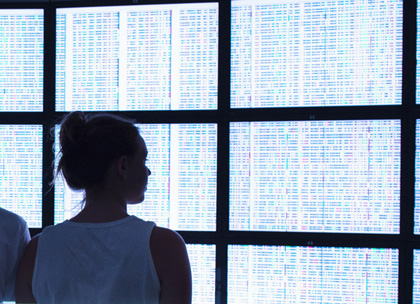

October 9, 2014 — UC Santa Cruz leads $11 million Center for Big Data in Translational Genomics

Credit: Elena Zhukova

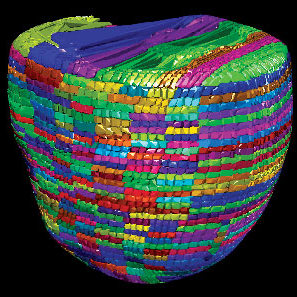

UCSC 10/9/2014—The National Institutes of Health (NIH) has awarded $11 million to UC Santa Cruz to create the technical infrastructure needed for the broad application of genomics in medicine and biomedical research. This grant from the National Human Genome Research Institute (NHGRI) funds the Center for Big Data in Translational Genomics, a multi-institutional partnership based at UC Santa Cruz and led by David Haussler, professor of biomolecular engineering and director of the UC Santa Cruz Genomics Institute. The Center’s overarching goal is to help the biomedical community use genomic information to better understand human health and disease. To do this, scientists must be able to share and analyze genomic datasets that are orders of magnitude larger than those that can be handled by the existing infrastructure. Advances in DNA sequencing technology have made it increasingly affordable to sequence a person's entire genome, but managing genomic and related data from millions of individuals is a daunting challenge. The Center for Big Data in Translational Genomics will develop new protocols and tools for genomic data and test them in four pilot projects.

View UCSC Data Science Press Release

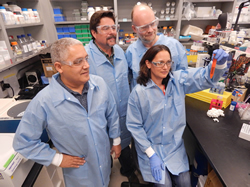

October 7, 2014 — Bio researchers receive patent to fight superbugs

Lawrence Livermore National Laboratory scientists (front row left to right) Matt Coleman and Feliza Bourguet and (back row left to right) Brian Souza and Patrik D'haeseleer received a patent for developing a computational genomic technique to fight superbugs (antibiotic-resistant bacteria). Credit: Julie Russell/LLNL

LLNL 10/7/2014—Superbugs, or antibiotic-resistant bacteria, have been on the rise since antibiotics were first introduced 80 years ago. Overprescription and misuse of antibiotics have allowed bacterial pathogens to develop immunities against them. As a result, superbugs sicken nearly 2 million Americans each year, of whom roughly 23,000 die. Lawrence Livermore National Laboratory (LLNL) scientists have now received a patent for producing antimicrobial compounds that degrade and destroy antibiotic-resistant bacteria by using a pathogen’s own genes against it. Their technique uses computational tools and genome sequencing to identify which genes inside a bacterium encode lytic proteins—enzymes that normally produce nicks in cell walls that allow cells to divide and multiply. But in high concentrations, the enzymes rapidly degrade and rupture cell walls. Lytic proteins circumvent any defenses that bacteria have developed. The LLNL approach can be used to fight superbugs such as antibiotic-resistant E. coli, Salmonella, Campylobacter, methicillin-resistant Staphylococcus aureus (MRSA), Bacillus anthracis and many others.

View LLNL Data Science Press Release

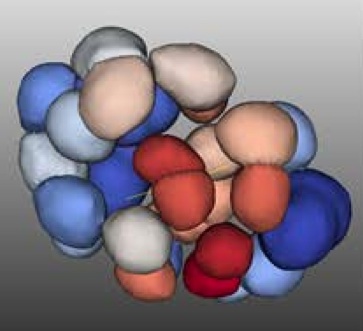

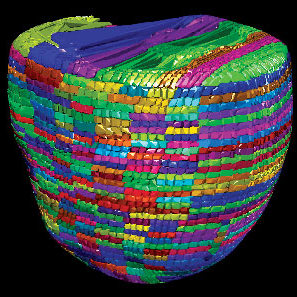

October 6, 2014 — RCSB Protein Data Bank launches mobile application

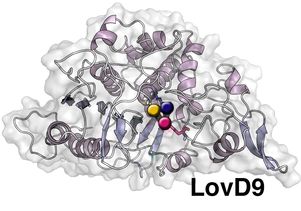

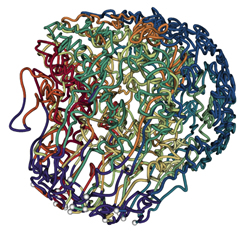

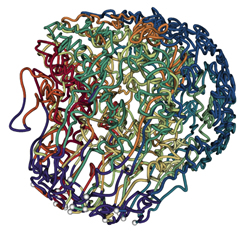

Credit: RCSB Protein Data Bank

SDSC 10/6/2014—The RCSB Protein Data Bank (PDB), which recently archived its 100,000th molecule structure, has introduced a free mobile application that enables the general public and expert researchers to quickly search and visualize the 3D shapes of proteins, nucleic acids, and molecular machines. “As the mobile web is starting to surpass desktop and laptop usage, scientists and educators are beginning to integrate mobile devices into their research and teaching,” said Peter Rose, a researcher with the San Diego Supercomputer Center (SDSC) at UC San Diego, and Scientific Lead with the RCSB PDB. “In response, we have developed this application for iOS and Android mobile platforms to enable fast and convenient access to RCSB PDB data and services.” The goal was to produce an intuitive app with a simple search interface, quick browsing of search results, a view of basic data about a structure entry and its PubMed abstract, and high-performance molecular visualization. In addition, the app provides access to the RCSB PDB Molecule of the Month educational series, and can be used to store personal notes and annotations.

View SDSC Data Science Press Release

October 6, 2014 — The ocean’s future

This San Miguel Island rock wall is covered with a diverse community of marine life. Credit: Santa Barbara Coastal Long-Term Ecological Research Program

UCSB 10/6/2014—Is life in the oceans changing over the years? Are humans causing long-term declines in ocean biodiversity with climate change, fishing and other impacts? At present, scientists are unable to answer these questions because little data exist for many marine organisms, and the small amount of existing data focuses on small, scattered areas of the ocean. A group of researchers from UCSB, the United States Geological Survey (USGS), National Oceanic and Atmospheric Administration (NOAA) National Marine Fisheries Service, and UC San Diego’s Scripps Institution of Oceanography is creating a new prototype system—the Marine Biodiversity Observation Network—to solve this problem. The network will integrate existing data over large spatial scales using geostatistical models and will utilize new technology to improve knowledge of marine organisms. UCSB’s Center for Bio-Image Informatics will use advanced image analysis to automatically identify different species including fish. In addition to describing patterns of biodiversity, the project will use mathematical modeling to examine the value of information on biodiversity in making management decisions as well as the cost of collecting that information in different ways. The five-year $5 million project will center on the Santa Barbara Channel, but the long-term goal is to expand the network around the country and around the world to track over time the biodiversity of marine organisms, from microbes to whales.

View UCSB Data Science Press Release

October 6, 2014 — Genomic sequencing research funded by Moore Foundation award

UCD 10/6/2014—The Gordon and Betty Moore Foundation has announced the selection of 14 Moore Investigators in Data-Driven Discovery. Among them is C. Titus Brown, visiting associate professor of population health and reproduction at the UC Davis School of Veterinary Medicine. Brown will be awarded $1.5 million over five years to support his research on genomic sequencing at UCD when he arrives in January 2015. Brown uses novel computer science tools to explore large genomic sequencing data sets. While at UCD, he will lead the Laboratory of Data Intensive Biology, which works across developmental biology, molecular biology, bioinformatics, metagenomics, and next-generation sequencing to build better biological understanding. With the grant, Brown is planning to build open software to help biologists discover patterns in large distributed data sets—key to understanding ecology and evolution.

View UCD Data Science Press Release

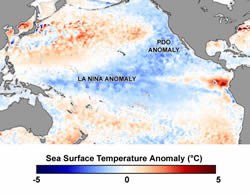

October 5, 2014 — Livermore scientists suggest ocean warming in Southern Hemisphere underestimated

The Antarctic Ocean is a remote place where icebergs frequently drift off the Antarctic coast and can be seen during their various stages of melting. This iceberg, sighted off the Amery Ice Shelf, also has bands of translucent blue ice formed by sea or freshwater freezing in bands between layers of more compressed and white glacial ice. Credit: Andrew Meijers/BAS

LLNL 10/5/2014—Using satellite observations and a large suite of climate models, Lawrence Livermore scientists have found that long-term ocean warming in the upper 700 meters of Southern Hemisphere oceans has likely been underestimated. “This underestimation is a result of poor sampling prior to the last decade and limitations of the analysis methods that conservatively estimated temperature changes in data-sparse regions,” said LLNL oceanographer Paul Durack, lead author of a paper appearing in the October 5 issue of the journal Nature Climate Change. Ocean heat storage is important because it accounts for more than 90 percent of the Earth’s excess heat that is associated with global warming. The observed ocean and atmosphere warming is a result of continuing greenhouse gas emissions. The Southern Hemisphere oceans make up 60 percent of the world’s oceans. The results suggest that global ocean warming has been underestimated by 24 to 58 percent. The conclusion that warming has been underestimated agrees with previous studies; however, this study is the first time that scientists have tried to quantify how much heat has been missed.

View LLNL Data Science Press Release

October 3, 2014 — Three faculty members awarded National Medal of Science

The late David Blackwell, former professor of mathematics and statistics

UCB 10/3/2014—Three UC Berkeley faculty members were selected Oct. 3 by President Barack Obama to receive the National Medal of Science, the nation’s highest honor for a scientist. Two were mathematicians: Alexandre J. Chorin, 76, University Professor emeritus of mathematics, and statistician David Blackwell (who died in 2010 at the age of 91). Chorin, who also is a Senior Faculty Scientist in the Mathematics Group at Lawrence Berkeley National Laboratory, introduced powerful new computational methods for the solution of problems in fluid mechanics. His methods are widely used to model airflow over aircraft wings and in turbines and engines, water flow in oceans and lakes, combustion in engines, and blood flow in hearts and veins. His methods have also contributed to the theoretical understanding of turbulent flow. Blackwell, the first black admitted to the National Academy of Sciences and the first tenured black professor in UC Berkeley history, was a mathematician and statistician who contributed to numerous fields, including probability theory, game theory and information theory. He chaired UC Berkeley’s Department of Statistics and served in 1955 as president of the Institute of Mathematical Statistics, an international professional and scholarly society.

View UCB Data Science Press Release

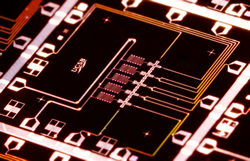

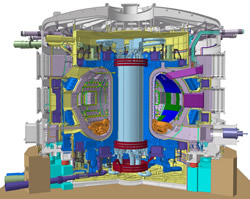

October 3, 2014 — Research opportunity announced for Quantum Artificial Intelligence Laboratory

NASA Ames 10/3/2014—The Universities Space Research Association (USRA) has announced a call for proposals to utilize the D-Wave Two quantum computer at NASA’s Quantum Artificial Intelligence Laboratory, located at the NASA Advanced Supercomputing facility at NASA Ames Research Center. The projects selected will have access to the computer from November 2014 through September 2015 in order to research artificial intelligence algorithms and advanced programming techniques for quantum annealing. The deadline for proposals is October 31, 2014.

NASA Ames link goes to USRA:

http://www.usra.edu/quantum/rfp/

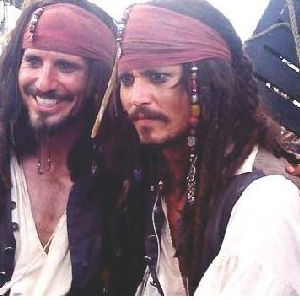

October 2, 2014 — Why we can’t tell a Hollywood heartthrob from his stunt double

A new study explains the visual mechanism behind our inability to tell actors from their stunt doubles, among other things.

UCB 10/2/2014—Johnny Depp has an unforgettable face. Tony Angelotti, his stunt double in Pirates of the Caribbean, does not. So why is it that when they’re swashbuckling on screen, audiences worldwide see them both as the same person? UC Berkeley scientists have cracked that mystery. Researchers have pinpointed the brain mechanism by which we latch on to a particular face even when it changes. In searching for an exact match to a “target” face on a computer screen, study participants consistently identified a face that was not the target face, but a composite of the faces they had seen over the past few seconds. Moreover, participants judged the match to be more similar to the target face than it really was. While it may seem as though our brain is tricking us into morphing, say, an actor with his stunt double, this “perceptual pull” is actually a survival mechanism, giving us a sense of stability, familiarity and continuity in what would otherwise be a visually chaotic world, researchers point out. The study was published Thursday, Oct. 2 in the online edition of the journal Current Biology.

View UCB Data Science Press Release

October 2, 2014 — Cybertools offer new channels for free speech, but grassroots organizing still critical

Kweku Opoku-Agyemang, a Development Impact Lab Postdoctoral Fellow at UC Berkeley’s Blum Center for Developing Economies, explains how the use of mobile technology that automates survey-taking can help expand the political power of the general public in Ghana. Credit: UC Berkeley video produced by Roxanne Makasdjian and Phil Ebiner

UCB 10/2/2014—Communication tools today have changed social movements since the Free Speech Movement of 50 years ago. Online petitions and survey software make it easier for users to register their opinions with elected officials, while fast response times enable organizers to orchestrate logistics or respond to developing events. Reported efforts by Chinese officials to censor news of the current pro-democracy protests in Hong Kong by disrupting access to Instagram and removing references to the demonstrations illustrate the degree to which social media is seen as a threat. On the internet, however, every action leaves a trace, opening up the potential for surveillance. Cybersecurity experts have identified malware called Xsser that infects the operating systems of Apple mobile devices. The malware, with code written in Chinese, is capable of stealing text messages, call logs, photos and passwords. Experts believe Xsser is targeting pro-democracy protesters in Hong Kong. Without anonymity, users are vulnerable to being tracked and persecuted.

View UCB Data Science Press Release

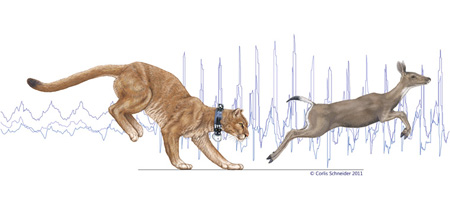

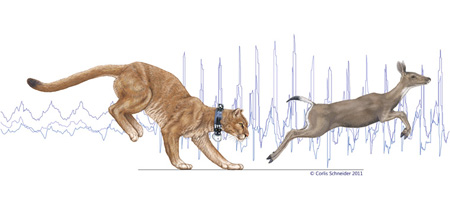

October 2, 2014 — Study of mountain lion energetics shows the power of the pounce

In the background of this illustration are typical SMART collar accelerometer traces for walking and then running, while the foreground shows a collared puma chasing a black-tailed deer. Credit: Corlis Schneider

UCSC 10/2/2014—Scientists at UC Santa Cruz, using a new wildlife tracking collar developed by a computer engineering graduate student, were able to continuously monitor the movements of mountain lions in the wild and determine how much energy the big cats use to stalk, pounce, and overpower their prey. The research team’s findings, published October 3 in Science, help explain why most cats use a “stalk and pounce” hunting strategy. The new Species Movement, Acceleration, and Radio Tracking (SMART) wildlife collar—equipped with GPS, accelerometers, and other high-tech features—tells researchers not just where an animal is but what it is doing and how much its activities “cost” in terms of energy expenditure. Understanding the energetics of wild animals moving in complex environments is valuable information for developing better wildlife management plans.

View UCSC Data Science Press Release

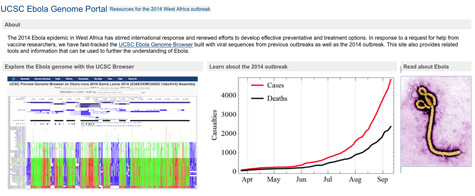

October 1, 2014 — UCSC Ebola genome browser now online to aid researchers’ response to crisis

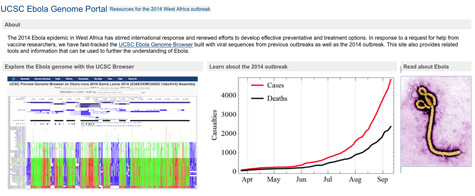

The UCSC Ebola Genome Portal contains links to the newly created Ebola browser and to scientific literature on the deadly virus.

UCSC 10/1/2014—A new Ebola bioinformatics tool—a genome browser to assist global efforts to develop a vaccine and antiserum to help stop the spread of the Ebola virus—was released on September 30 by the UC Santa Cruz Genomics Institute. UCSC has established the UCSC Ebola Genome Portal, with links to the new Ebola genome browser as well as links to all the relevant scientific literature on the virus. Scientists around the world can access the open-source browser to compare genetic changes in the virus genome and areas where it remains the same. The browser allows scientists and researchers from drug companies, other universities, and governments to study the virus and its genomic changes as they seek a solution to halt the epidemic. In a similar marshaling of forces in the face of a worldwide threat 11 years ago, UCSC researchers created a SARS virus browser.

View UCSC Data Science Press Release

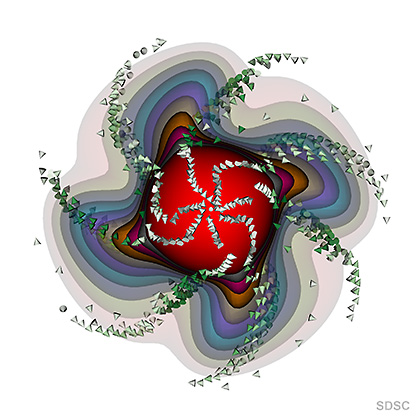

October 1, 2014 — SDSC granted $1.3 million award for ‘SeedMe.org’ data sharing infrastructure

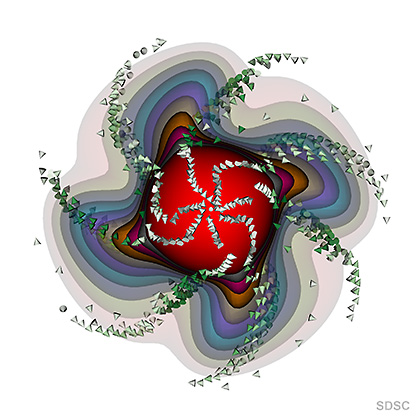

This image and related research data, one of numerous projects being shared and stored using SeedMe, shows a simple model of a geodynamo used for benchmark codes. The view is from center toward one of the poles, and the cones show convective flow toward higher temperature (light green to dark green with increasing velocity) in a spiraling form caused by rotation. The shells of various colors depict temperature, increasing from the outer boundary towards the interior. Credit: Amit Chourasia, Ashley Willis, Maggie Avery, Chris Davies, Catherine Constable, David Gubbins

SDSC 10/1/2014—Researchers at the San Diego Supercomputer Center (SDSC) at UC San Diego have received a three-year, $1.3 million award from the National Science Foundation (NSF) to develop a web-based resource that lets scientists seamlessly share and access preliminary results and transient data from research on a variety of platforms, including mobile devices. The resource is called Swiftly Encode, Explore and Disseminate My Experiments (SeedMe); the award is from NSF’s Data Infrastructure Building Blocks (DIBBs) program, part of the foundation’s Cyberinfrastructure Framework for 21st Century Science and Engineering (CIF21). Current methods for sharing and assessing transient data and preliminary results are cumbersome, labor intensive, and largely unsupported by useful tools and procedures. “SeedMe provides an essential yet missing component in current high-performance computing as well as cloud computing infrastructures,” said SDSC Director Michael Norman, co-principal investigator on the project.

View SDSC Data Science Press Release

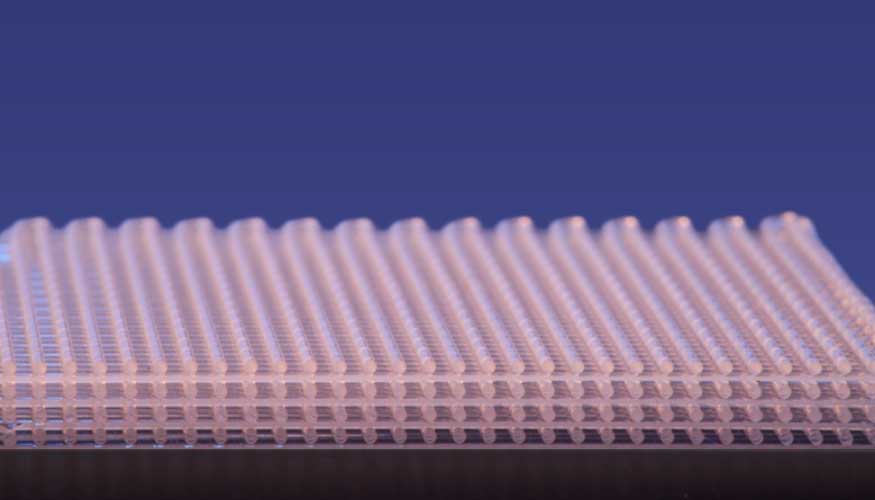

September 30, 2014 — NIH taps lab to develop sophisticated electrode array system to monitor brain activity

LLNL is developing an advanced electronics system to monitor and modulate neurons, to be packed with more than 1,000 tiny electrodes embedded in different areas of the brain to record and stimulate neural circuitry.

LLNL 9/30/2014—The National Institutes of Health (NIH) awarded Lawrence Livermore National Laboratory (LLNL) a grant to develop an electrode array system that will enable researchers to better understand how the brain works through unprecedented resolution and scale. LLNL’s project is part of NIH’s efforts to support President Obama’s Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative, a new research effort to revolutionize our understanding of the human mind and uncover ways to treat, prevent and cure brain disorders. LLNL’s goal is to develop a system that will allow scientists to simultaneously study how thousands of neuronal cells in various brain regions work together during complex tasks such as decision making and learning. The biologically compatible neural system will be the first of its kind to have large-scale network recording capabilities that are designed to continuously record neural activities for months to years.

View LLNL Data Science Press Release

September 30, 2014 — NIH awards UC Berkeley $7.2 million to advance brain initiative

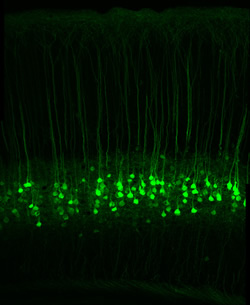

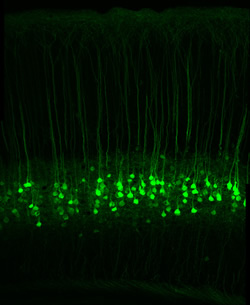

A section of the sensory cortex of a mouse in which cells known as long range projection neurons have been genetically modified to express a green fluorescent protein. John Ngai and colleagues will use single-cell genomics techniques to reveal the diversity of these and other neurons in the brain. Credit: David Taylor and Hillel Adesnik

UCB 9/30/2014—The National Institutes of Health today announced its first research grants through President Barack Obama’s BRAIN Initiative, including three awards to UC Berkeley (UCB), totaling nearly $7.2 million over three years. Among these is a new $5.6 million public-private collaboration between Carl Zeiss Microscopy and UCB to support the Berkeley Brain Microscopy Innovation Center (BrainMIC), which will fast-track microscopy development for emerging neurotechnologies and will run an annual course to teach researchers how to use the new technologies. Part of the Helen Wills Neuroscience Institute, the program will generate innovative devices and analytic tools in engineering, computation, chemistry and molecular biology to enable transformative brain science from studies of human cognition to neural circuits in model organisms.

View UCB Data Science Press Release

September 29, 2014 — At the interface of math and science

Model of vesicle adhesion, rupture and island dynamics during the formation of a supported lipid bilayer (from work by Atzberger et al.) featured on the cover of the journal Soft Matter. Credit: Peter Allen

UCSB 9/29/2014—New mathematical approaches—developed by Paul Atzberger, UC Santa Barbara professor of mathematics and mechanical engineering, his graduate student Jon Karl Sigurdsson, and other coauthors—reveal insights into how proteins move around within lipid bilayer membranes. These microscopic structures can form a sheet that envelops the outside of a biological cell in much the same way that human skin serves as the body’s barrier to the outside environment. “It used to be just theory and experiment,” Atzberger said. “Now computation serves an ever more important third branch of science. With simulations, one can take underlying assumptions into account in detail and explore their consequences in novel ways. Computation provides the ability to grapple with a level of detail and complexity that is often simply beyond the reach of pure theoretical methods.” Their work was published in Proceedings of the National Academy of Sciences (PNAS) and featured on the cover of the journal Soft Matter.

View UCSB Data Science Press Release

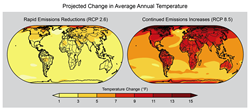

September 25, 2014 — Climate, Earth system project draws on science powerhouses

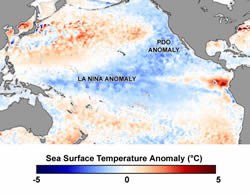

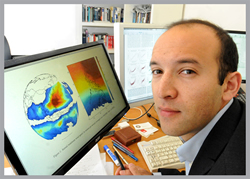

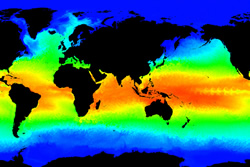

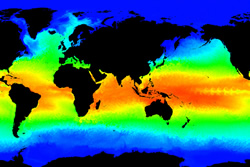

Computer modeling provides policymakers with essential information on such data as global sea surface temperatures related to specific currents.

LANL 9/25/2014—The US Department of Energy national laboratories are teaming with academia and the private sector to develop the most advanced climate and Earth system computer model yet created. Accelerated Climate Modeling for Energy (ACME) is designed to accelerate the development and application of fully coupled, state-of-the-science Earth system models for scientific and energy applications. The project—which includes seven other national laboratories, four academic institutions, and one private-sector company—will focus initially on three climate-change science drivers and corresponding questions to be answered during the project's initial phase: water cycle, biogeochemistry, and cryosphere systems. Over a planned decade, the project aims to conduct simulations and modeling on the most sophisticated high-performance computing systems as they become available: 100+ petaflop machines and eventually exascale supercomputers.

View LANL Data Science Press Release

September 25, 2014 — Three Bay Area institutions join forces to seed transformative brain research

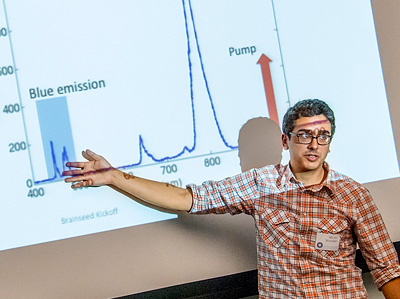

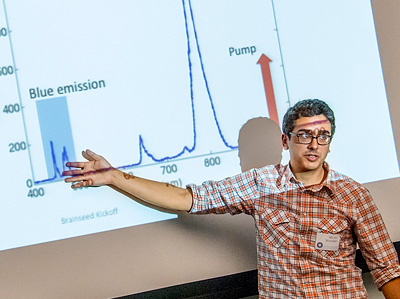

Michel Maharbiz of electrical engineering and computer science describes a project to probe more deeply into the cerebral cortex. Credit: Roy Kaltschmidt/LBNL

UCB 9/25/2014—UC Berkeley, UC San Francisco, and Lawrence Berkeley National Laboratory (LBNL) each put up $1.5 million over three years to seed innovative but risky research in a one-of-a-kind collaboration called BRAINseed. Among their projects is one for development of instrumentation and computational methods. Though great progress has been made in mapping the function of the human brain, researchers have been stymied by limitations in both recording devices and the ability to analyze and understand brain signals. UCSF’s Edward F. Chang, M.D., is leading a team that aims to achieve up to a thousandfold increase in the density and electronic sophistication of recording arrays. The vast amount of data collected by these arrays will be stored and analyzed by some of the world’s most powerful computers at the National Energy Research Scientific Computing Center (NERSC), enabling a new level of understanding of the brain in both health and disease. Chang’s collaborators are Peter Denes and Kristofer Bouchard of LBNL and Fritz Sommer of UCB.

View UCB Data Science Press Release

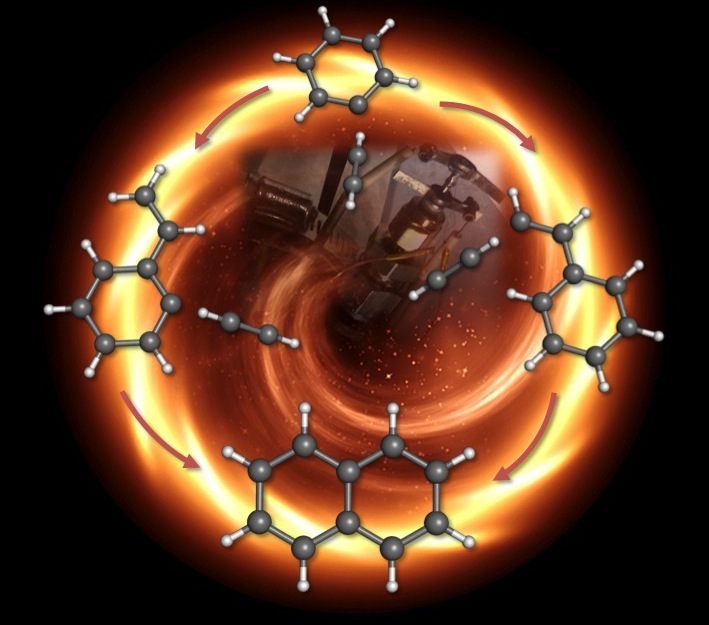

September 24, 2014 — Human genome was shaped by an evolutionary arms race with itself

An evolutionary arms race has shaped the genomes of primates, including humans. Credit: David Greenberg

UCSC 9/28/2014—New findings by scientists at UC Santa Cruz suggest that an evolutionary arms race between rival elements within the genomes of primates drove the evolution of complex regulatory networks that orchestrate the activity of genes in every cell of our bodies. The arms race is between mobile DNA sequences known as “retrotransposons”—nicknamed “jumping genes”—and the genes that have evolved to control them. The UC Santa Cruz researchers have, for the first time, identified genes in humans that make repressor proteins to shut down specific jumping genes. The researchers also traced the rapid evolution of the repressor genes in the primate lineage. The study involved close collaboration between a “wet lab” for developing genetic assays and a “dry lab” where researchers used computational tools of genome bioinformatics to reconstruct the evolutionary history of primate genomes. Their findings are published in the September 28 issue of Nature.

View UCSC Data Science Press Release

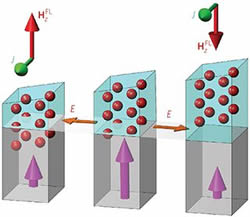

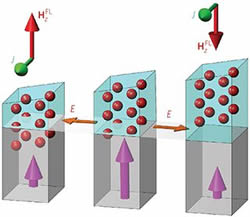

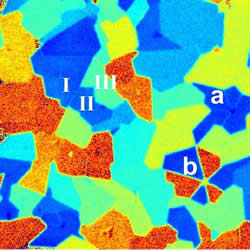

September 23, 2014 — NERSC helps corroborate two distinct mechanisms in ferroelectric material

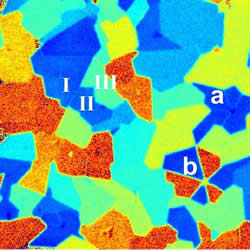

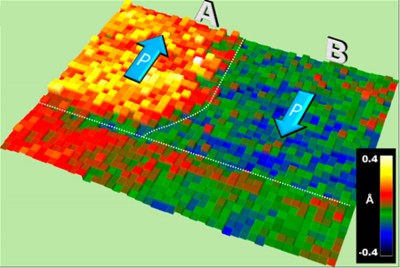

Collaborators from Korea, Norway, Ukraine and the United States analyzed atomic-scale polarization behavior and chemical composition for a ferroelectric (BFO) film on a metal (LSMO) to reveal electrically driven chemical changes that may someday be manipulated in novel oxide electronic devices. Credit: Y.-M. Kim et al./ORNL

NERSC 9/23/2014—Complex oxide crystals—which combine oxygen atoms with assorted metals—have long tantalized the materials science community with their promise in next-generation energy and information technologies. Because their electrons interact strongly with their environments, complex oxides are versatile, existing as insulators, metals, magnets and superconductors. They can tightly couple diverse physical properties, such as stress and strain, magnetism and magnetic order, electric field and polarization. In highly correlated electron systems, physical properties are interconnected like a tangle of strings: often pulling one string takes others with it. Increased understanding of the properties of complex oxides will improve the ability to predict and control materials for new energy technologies. One project has already led to a surprising discovery—that intrinsic electric fields can drive oxygen diffusion at interfaces in engineered thin films made of complex oxides. An Oak Ridge National Laboratory research team used supercomputing resources at the Department of Energy’s National Energy Research Scientific Computing Center (NERSC) to help confirm their findings. The study is published in the September issue of Nature Materials.

View NERSC Data Science Press Release

September 23, 2014 — UC Santa Cruz establishes Symantec Presidential Chair in Storage and Security

Ethan Miller directs the UC Santa Cruz Center for Research in Storage Systems. Credit: C. Lagattuta

UCSC 9/23/2014—A $500,000 gift to UC Santa Cruz from computer security company Symantec, plus matching funds from the UC Presidential Match for Endowed Chairs, will establish a $1 million endowment at UC Santa Cruz for the Symantec Presidential Chair in Storage and Security at UCSC’s Baskin School of Engineering. The endowed chair supports research and teaching in the engineering school’s Department of Computer Science, which has strong programs in computer security and data storage systems. Ethan Miller, professor of computer science and director of the Center for Research in Storage Systems at UC Santa Cruz, has been appointed as the inaugural holder of the new chair. The Baskin School of Engineering is home to world-class faculty in data storage systems and other key areas of data science. Symantec’s gift is a significant contribution to the Data Science Leadership Initiative of the $300-million Campaign for UC Santa Cruz.

View UCSC Data Science Press Release

September 23, 2014 — Los Alamos researchers uncover new properties in nanocomposite oxide ceramics for reactor fuel, fast-ion conductors

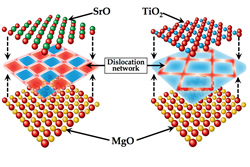

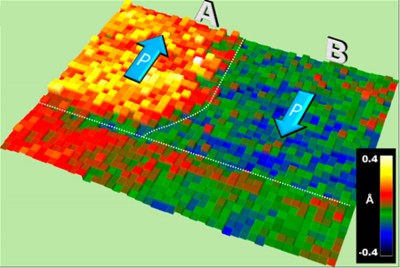

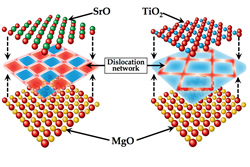

Schematic depicting distinct dislocation networks for SrO- and TiO2-terminated SrTiO3/MgO interface

LANL 9/23/2014—Nanocomposite oxide ceramics have potential uses as ferroelectrics, fast ion conductors, and nuclear fuels and for storing nuclear waste. A composite is a material containing grains, or chunks, of several different materials; in a nanocomposite, the size of each grain is on the order of nanometers, roughly 1000 times smaller than the width of a human hair. Interfaces where the different materials meet are regions of unique electronic and ionic properties, which could enhance conductivity of batteries and fuel cells. Los Alamos National Laboratory (LANL) simulations that explicitly account for the position of each atom within two different materials reveal that some interfaces exhibit remarkably different atomic structures: misfit dislocations that form when the two materials do not exactly match in size dictate the functional properties of the interface, such as the conductivity. The observed relationship between the termination chemistry and the dislocation structure of the interface offers potential avenues for tailoring transport properties and radiation damage resistance of oxide nanocomposites by controlling the termination chemistry at the interface. The research is described in a paper published in the journal Nature Communications.

View LANL Data Science Press Release

September 22, 2014 — Linguists receive $260,000 grant to study endangered Irish language

Screen shot of ultrasound of tongue of native Irish speaker

UCSC 9/22/2014—Even though the Irish language is an official language of Ireland and has considerable government support, it is highly endangered: only 1.5% to 3% of the population regularly uses it in their communities and its future is in doubt. One unusual feature of the Irish language is that every consonant comes in two varieties—one where the tongue is raised and pushed forward, and one where it is raised and retracted. So, one important goal of researchers is to document that contrast—using ultrasonic real-time tongue imaging to non-invasively capture video of the tongue’s surface while it moves during speech. UC Santa Cruz has been awarded a $261,255 grant from the National Science Foundation to undertake a new project titled “Collaborative Research: An Ultrasound Investigation of Irish Palatalization.” The researchers will take the ultrasound machine to Ireland, record native speakers of Irish in three major dialect regions that are isolated from one another, and analyze the data. Analysis of the ultrasound data will also allow them to answer more general questions about speech production.

View UCSC Data Science Press Release

September 22, 2014 — Lawrence Livermore renews pact with Georgetown University to expand research and education in science and policy

LLNL Director Bill Goldstein and Georgetown President John DeGioia sign a memorandum of understanding on Friday, renewing the LLNL-Georgetown partnership and expanding areas of collaboration. Credit: Georgetown University

LLNL 9/22/2014—Lawrence Livermore National Laboratory (LLNL) Director Bill Goldstein and Georgetown University President John DeGioia on Friday renewed their institutional commitment by signing a memorandum of understanding for an additional five years to expand the collaborative work in the areas of cyber security, biosecurity, nonproliferation and global climate, energy and environmental sciences. This renewal represents a significant expansion of an MOU originally signed in December 2009 and is a framework to broaden LLNL collaborations university-wide, including the Georgetown University Medical Center. The new MOU expands the fields of study to include data science and data analytics; bio-security; emergency and disaster management; global climate, energy and environment; food safety and security; and biotechnology (including such fields as infectious diseases, drug discovery, regenerative medicine, and urban resilience). In data analytics, projects include a potential collaboration on a new master’s degree program as well as working together to create a shared computational infrastructure leveraging LLNL’s high-performance computing capabilities.

View LLNL Data Science Press Release

September 19, 2014 — Project launched to study evolutionary history of fungi

Jason Stajich is an associate professor of plant pathology and microbiology at UC Riverside. Credit: Stajich Lab/UC Riverside

UCR 9/19/2014—UC Riverside is one of 11 collaborating institutions that have received a total of $2.5 million from the National Science Foundation for a four-year project called the Zygomycete Genealogy of Life (ZyGoLife) focused on studying zygomycetes: ancient lineages of fungi used in numerous industrial processes and fermentation of foods. Thought to be among the first terrestrial fungi, zygomycetes represent one of the earliest origins of multicellular growth forms. Indeed, symbiotic associations with zygomycetes may have facilitated the origin of land plants. Their filamentous growth is in the form of the tube-like cell growth that characterizes species of fungi including bread and fruit molds, animal and human pathogens, and decomposers of a wide variety of organic compounds. Jason Stajich, associate professor of plant pathology and microbiology, is principal investigator. His UCR lab will be spearheading genome sequence analysis to better establish the family tree of fungi from these lineages and disseminating data into genomic databases such as MycoCosm of the Joint Genome Institute of the U.S. Department of Energy and FungiDB. The Stajich lab will also host visiting students and postdocs from the other teams to provide training in bioinformatics and evolutionary genomics in fungi.

View UCR Data Science Press Release

September 19, 2014 — Library taps specialist to explore role of technology in humanities research

Rachel Deblinger

UCSC 9/19/2014—The UC Santa Cruz University Library and Humanities Division have jointly awarded a two-year Council on Library and Information Resources (CLIR) Postdoctoral Fellowship supporting digital humanities scholarship to Rachel Deblinger. As a CLIR Digital Humanities Specialist, Deblinger will have the opportunity to build a community around digital humanities scholarship at a time when the practice is emerging at UCSC. Collaborating with librarians, faculty and students across multiple divisions, Deblinger will explore online collaborative research practices supporting digital humanities and develop a pilot infrastructure to support this research. She will also examine the role of the University Library supporting digital humanities, conduct workshops, and help to facilitate graduate research. Previously at UCLA, she served as technical consultant on the development of a textual database supporting the publication of Sephardic Lives: A Documentary History of the Ottoman Judeo-Spanish World and its Diaspora, 1700-1950, and was the thematic expert at the UCLA Center for Digital Humanities for the computational visualization of Shoah Foundation Holocaust testimonies.

View UCSC Data Science Press Release

September 16, 2014 — The Exxon Valdez — 25 years later

Oil coated the rocky shoreline after the Exxon Valdez ran aground, leaking 10 to 11 million gallons of crude oil into the Gulf of Alaska on March 24, 1989. Credit: Alaska Resources Library & Information Services

UCSB 9/16/2014—UC Santa Barbara’s National Center for Ecological Analysis and Synthesis (NCEAS) has collaborated with investigators from Gulf Watch Alaska and the Herring Research and Monitoring Program to collate historical data from a quarter-century of monitoring studies on physical and biological systems altered by the 1989 Exxon Valdez oil spill. Now, two new NCEAS working groups will synthesize this and related data and conduct a holistic analysis to answer pressing questions about the interaction between the oil spill and larger drivers such as broad cycles in ocean currents and water temperatures. Both statistical and modeling approaches will be used to understand both mechanisms of change and the changes themselves, and to create an overview of past changes and potential futures for the entire area. The investigators will use time series modeling approaches to determine the forces driving variability over time in these diverse datasets. They will also examine the influences of multiple drivers, including climate forcing, species interactions and fishing. By evaluating species’ life history attributes, such as longevity and location, and linking them to how and when each species was impacted by the spill, the researchers may help predict ecosystem responses to other disasters and develop monitoring strategies to target vulnerable species before disasters occur.

View UCSB Data Science Press Release

September 16, 2014 — Human faces are so variable because we evolved to look unique

UCB 9/16/2014—The amazing variety of human faces—far greater than that of most other animals—is the result of evolutionary pressure to make each of us unique and easily recognizable, according to a new study by UC Berkeley scientists. They were able to assess human facial variability thanks to a U.S. Army database of body measurements compiled from male and female personnel in 1988. The researchers found that facial traits are much more variable than other bodily traits, such as the length of the hand. They also had access to data collected by the 1000 Genomes Project, which has sequenced more than 1,000 human genomes since 2008 and catalogued nearly 40 million genetic variations among humans worldwide. Looking at regions of the human genome that have been identified as determining the shape of the face, they found a much higher number of variants than for traits (such as height) not involving the face. They also compared the human genomes with recently sequenced genomes of Neanderthals and Denisovans and found similar genetic variation, which indicates that the facial variation in modern humans must have originated prior to the split between these different lineages. The study appeared Sept. 16 in the online journal Nature Communications.

View UCB Data Science Press Release

September 15, 2014 — 2014 Berkeley-Rupp Prize for boosting women in architecture, sustainability announced

KVA Matx Soft Rockers night time illumination and gathering. Credit: Phil Seaton/Living Photo

UCB 9/15/2014—Sheila Kennedy, an internationally recognized architect, innovator and educator, is the 2014 recipient of the Berkeley-Rupp Architecture Professorship and Prize. Awarded every two years, the Berkeley-Rupp Prize of $100,000 is given by UC Berkeley’s College of Environmental Design to a distinguished design practitioner or academic who has made a significant contribution to advance gender equity in the field of architecture, and whose work emphasizes a commitment to sustainability and community. As part of her research, Kennedy will partner with non-governmental organizations (NGOs) to engage communities of fabricators in three developing regions around the world. She will lead UC Berkeley students in computation, architectural design, engineering, and city planning in a series of hands-on design workshops exploring new urban infrastructure. Using soft materials—from paper to wood to bio-plastic—the group will develop open-source digital-fabrication techniques and create adaptable prototypes such as pop-up solar streetlights, soft refrigeration kits for bicycle vendors, and public benches that collect and clean fresh water.

View UCB Data Science Press Release

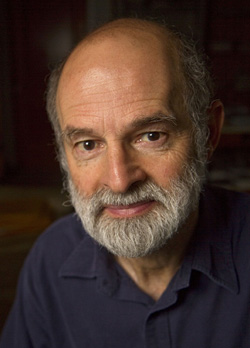

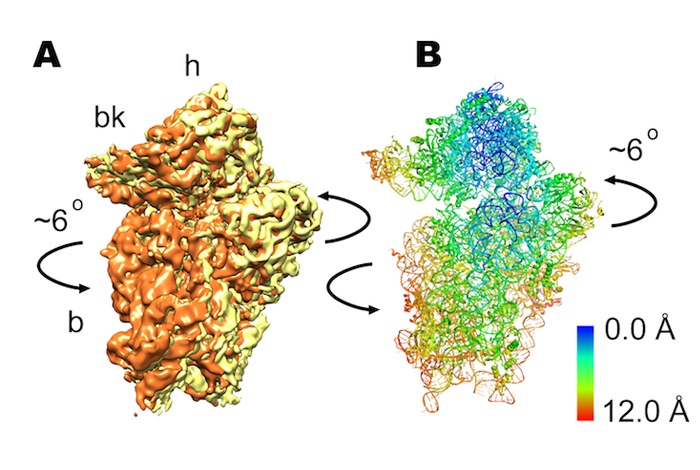

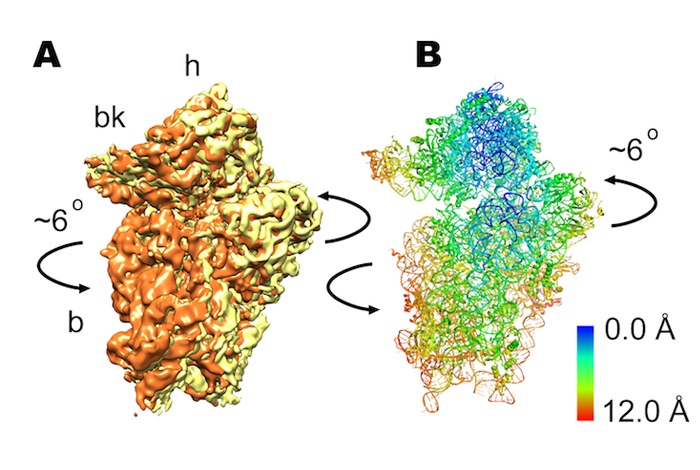

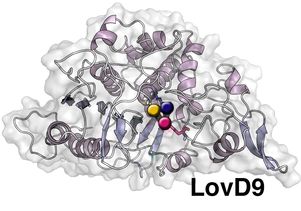

September 15, 2014 — Collaboration drives achievement in protein structure research

Thomas Terwilliger

LANL 9/15/2014—Computational analysis is key to structural understanding of a molecular machine that targets viral DNA. Researchers at Montana State University have provided the first blueprint of a bacterium’s “molecular machinery,” showing how bacterial immune systems fight off the viruses that infect them. By tracking down how bacterial defense systems work, the scientists can potentially fight infectious diseases and genetic disorders. The key is a repetitive piece of DNA in the bacterial genome called a CRISPR (Clustered Regularly Interspaced Short Palindromic Repeats). The bacterial genome uses the CRISPR to capture and “remember” the identity of an attacking virus. Now the scientists have created programmable molecular scissors, called nucleases, that are being exploited for precisely altering the DNA sequence of almost any cell type of interest. LANL—along with partners Lawrence Berkeley National Laboratory and Duke and Cambridge universities—developed software to analyze the protein structure of the nuclease, which was key to understanding its function. The researchers reported their findings in the journal Science.

View LANL Data Science Press Release

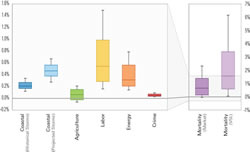

September 11, 2014 — Changing how we farm can save evolutionary diversity, study suggests

Diversified farms, such as this coffee plantation in Costa Rica, house substantial phylogenetic diversity. Credit: Daniel Karp

UCB 9/11/2014—A new study by biologists at Stanford University and UC Berkeley highlights the dramatic hit on the evolutionary diversity of wildlife when forests are transformed into agricultural lands. The researchers counted some 120,000 birds of nearly 500 species in three types of habitat in Costa Rica, and calculated the birds’ phylogenetic diversity, a measure of the evolutionary history embodied in wildlife. The study, published in the Sept. 12 issue of Science, found that the phylogenetic diversity of the birds fared worst in habitats characterized by intensive farmlands consisting of single crops. Such intensive monocultures supported 900 million fewer years of evolutionary history, on average, compared with untouched forest reserves. The researchers found a middle ground in diversified agriculture, or farmlands with multiple crops adjoined by small patches of forest. Such landscapes supported on average 600 million more years of evolutionary history than the single crop farms. This work is urgent, the authors say, because humanity is driving about half of all known life to extinction—including many species that play key roles in Earth’s life-support systems—mostly through agricultural activities to support our vast numbers and meat-rich diets.

UC Berkeley release

Stanford release

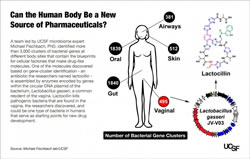

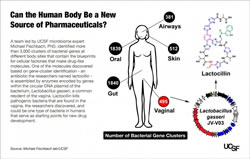

September 11, 2014 — Our microbes are a rich source of drugs, UCSF researchers discover

UCSF 9/11/2014—Bacteria that normally live in and upon us have genetic blueprints that enable them to make thousands of molecules that act like drugs, and some of these molecules might serve as the basis for new human therapeutics, according to UC San Francisco researchers in a study published in the Sept. 11 issue of the journal Cell. Microbiomes—ecosystems made up of many microbial species—are found in the gut, skin, nasal passages, mouth and vagina. Scientists have started to identify microbiomes in which species diversity and abundance differ from the normal range in ways that are associated with disease risks. By developing new data-analysis software and putting it to work on an extensive genetic database developed from human-associated bacterial samples collected as part of the National Institutes of Health’s ongoing Human Microbiome Project, the UCSF lab team identified clusters of bacterial genes that are switched on in a coordinated way to guide the production of molecules that are biologically active in humans.

View UCSF Data Science Press Release

September 11, 2014 — Teaching computers the nuances of human conversation

Marilyn Walker. Credit: C. Lagattuta

UCSC 9/11/2014—Natural language processing is now so good that the failure of automated voice-recognition systems to respond in a natural way has become glaringly obvious, according to Marilyn Walker, UC Santa Cruz professor of computer science. One of Walker’s current projects, funded last year by the National Science Foundation, involves analyzing posts from online debate forums to see how people present facts to support arguments. By annotating the online posts to uncover patterns in word choice and sentence construction, researchers seek to build a program that can identify sarcasm, report a poster’s stance on a topic, and identify the arguments and counter-arguments for a particular topic. Such software could also be useful as an educational tool: psychological evidence suggests that a debate becomes less polarized if people are exposed to multiple arguments. Changing how computers talk may also create an unspoken relationship that strengthens our connections to devices. Ultimately, such technology could be used to create companion robots, navigation programs, or restaurant recommendation software that interact with us more naturally.

View UCSC Data Science Press Release

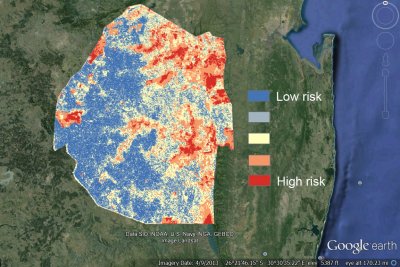

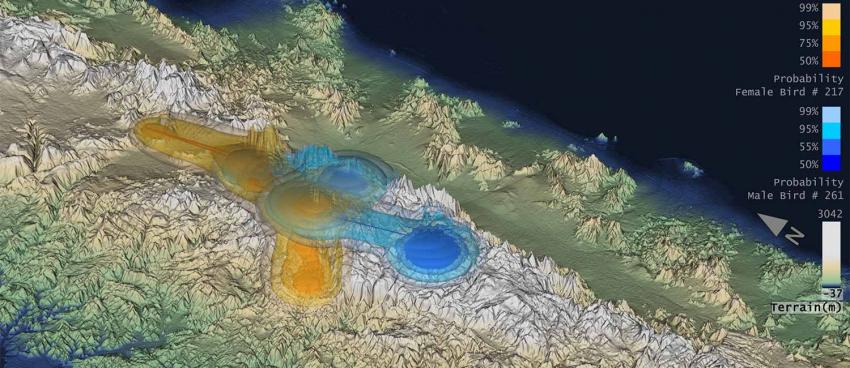

September 10, 2014 — UCSF, Google Earth Engine making maps to predict malaria

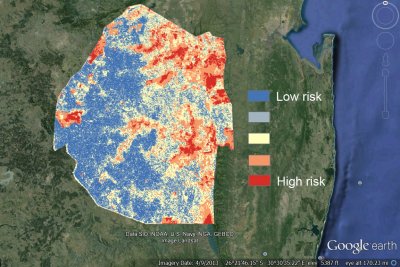

A sample risk map of malaria in Swaziland during the transmission season using data from 2011–2013.

UCSF 9/10/2014—UC San Francisco (UCSF) is working to create an online platform that health workers around the world can use to predict where malaria is likely to be transmitted using data on Google Earth Engine. The goal is to enable resource-poor countries to wage more targeted and effective campaigns against the mosquito-borne disease, which kills 600,000 people a year, most of them children. Google Earth Engine brings together the world’s satellite imagery—trillions of scientific measurements dating back almost 40 years—with data-mining tools for scientists, independent researchers and nations to detect changes, map trends and quantify differences on the Earth’s surface. Local health workers will be able to upload their own data on where and when malaria cases have been occurring and combine it with real-time satellite data on weather and other environmental conditions within Earth Engine to pinpoint where new cases are most likely to occur. That way, they can spray insecticide, distribute bed nets or give antimalarial drugs just to the people who still need them, instead of blanketing the entire country. By looking at the relationship between disease occurrence and factors such as rainfall, vegetation and the presence of water in the environment, the maps will also help health workers and scientists study what drives malaria transmission. The tool could also be adapted to predict other infectious diseases.

View UCSF Data Science Press Release

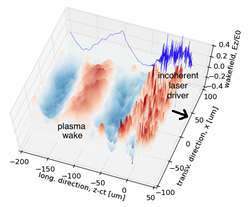

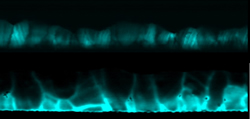

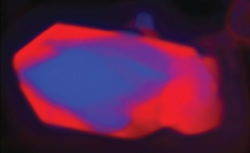

September 10, 2014 — Advanced Light Source sets microscopy record

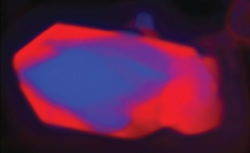

Ptychographic image using soft X-rays of lithium iron phosphate nanocrystal after partial delithiation. The delithiated region is shown in red.

LBNL 9/10/2014—A record-setting X-ray microscopy experiment may have ushered in a new era for nanoscale imaging. Working at the U.S. Department of Energy (DOE)’s Lawrence Berkeley National Laboratory (LBNL), a collaboration of researchers used low energy or “soft” X-rays to image structures only five nanometers in size. This resolution, obtained at LBNL’s Advanced Light Source (ALS), is the highest ever achieved with X-ray microscopy. Using ptychography, a coherent diffractive imaging technique based on high-performance scanning transmission X-ray microscopy (STXM), the collaboration was able to map the chemical composition of lithium iron phosphate nanocrystals after partial delithiation. The results yielded important new insights into a material of high interest for electrochemical energy storage. Key to the success of Shapiro and his collaborators were the use of soft X-rays, which have wavelengths between 1 and 10 nanometers, and a special algorithm that eliminated the effect of all incoherent background signals. The findings were published in the journal Nature Photonics.

View LBNL Data Science Press Release

September 9, 2014 — The search for Ebola immune response targets

SDSC 9/9/2014—The effort to develop therapeutics and a vaccine against the deadly Ebola virus disease (EVD) requires a complex understanding of the microorganism and its relationship within the host, especially the immune response. Adding to the challenge, EVD can be caused by any one of five known species within the genus Ebolavirus (EBOV), in the Filovirus family. Now, researchers at the La Jolla Institute for Allergy and Immunology (La Jolla Institute) and the San Diego Supercomputer Center (SDSC) at UC San Diego are assisting the scientific community by running high-speed online publications of analysis of data in the Immune Epitope Data Base (IEDB), and predicting epitopes using the IEDB Analysis Resource.

View SDSC Data Science Press Release

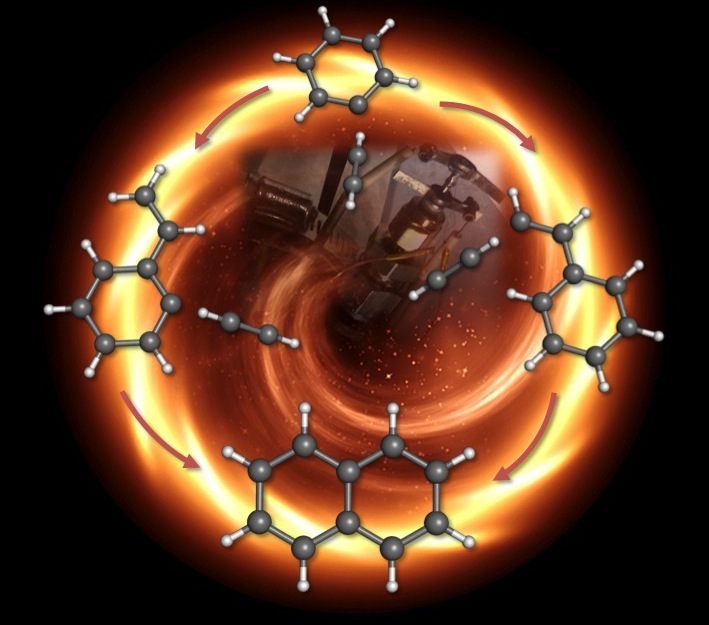

September 9, 2014 — SDSC joins the Intel Parallel Computing Centers Program

SDSC 9/9/2014—The San Diego Supercomputer Center (SDSC) at UC San Diego is working with semiconductor chipmaker Intel Corporation to further optimize research software to improve the parallelism, efficiency, and scalability of widely used molecular and neurological simulation technologies. The collaboration is part of the Intel Parallel Computing Centers program, which provides funding to universities, institutions, and research labs to modernize key community codes used across a wide range of disciplines to run on current industry-standard parallel architectures. SDSC researchers are expanding the Intel relationship to cover additional research areas and software packages, including improving the performance of simulations on manycore architectures, to allow researchers to study chemical reactions directly, without severe approximations. With President Obama’s announcement of the BRAIN initiative in April 2013, many are predicting computational neuroscience will have a scientific impact to rival what computational genomics had during the last decade.

View SDSC Data Science Press Release

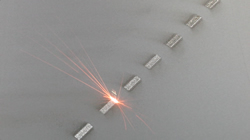

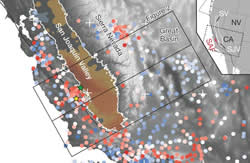

September 9, 2014 — Simulating the south Napa earthquake

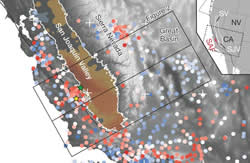

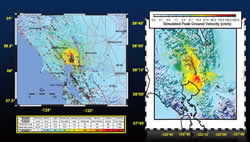

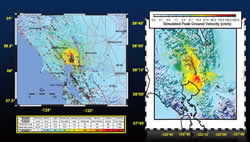

Researchers are using data from the South Napa earthquake to help validate models. These figures compare the observed intensity of ground motion from seismic stations of the California Integrated Seismic Network “ShakeMap” (left) with the simulated shaking (peak ground velocity) from SW4 simulations (right).

LLNL 9/9/2014—Lawrence Livermore seismologist Artie Rodgers is tapping into LLNL’s supercomputers to simulate the detailed ground motion of August’s magnitude 6.0 south Napa earthquake, the largest to hit the San Francisco Bay Area since the magnitude 6.9 Loma Prieta event in 1989. Using descriptions of the earthquake source from the UC Berkeley Seismological Laboratory, Rodgers is determining how the details of the rupture process and 3D geologic structure, including the surface topography, may have impacted the ground motion. Seismic simulations allow scientists to better understand the distribution of shaking and damage that can accompany earthquakes, including possible future “scenario” earthquakes. Simulations are only as valid as the elements going into them; thus the recent earthquake provides data to validate methods and models.

View LLNL Data Science Press Release

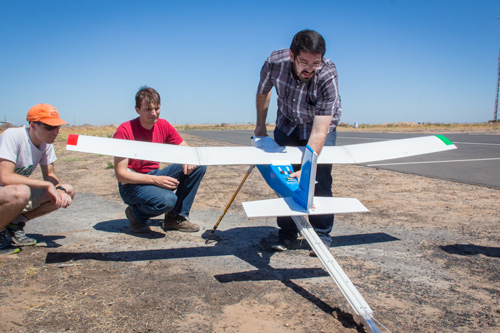

September 8, 2014 — Los Alamos conducts important hydrodynamic experiment in Nevada

Technicians at the Nevada National Security Site make final adjustments to the "Leda" experimental vessel in the "Zero Room" at the underground U1a facility.

LANL 9/8/2014—On August 12, 2014, Los Alamos National Laboratory (LANL) successfully fired the latest in a series of experiments at the Nevada National Security Site (NNSS). The experiment, called Leda, consisted of a plutonium surrogate material and high explosives to implode what weapon physicists call a “weapon-relevant geometry.” Hydrodynamic experiments such as Leda involve surrogate non-nuclear materials that mimic many of the properties of nuclear materials. Hydrodynamics refers to the fact that solids, under extreme conditions, begin to mix and flow like liquids. Scientists will now study the data in detail and compare with pre-shot predictions. The resulting findings will help physicists assess their ability to predict weapon-relevant physics and model weapon performance in the absence of full-scale underground nuclear testing.

View LANL Data Science Press Release

September 5, 2014 — When good software goes bad

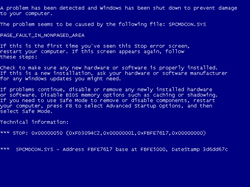

This so-called Blue Screen of Death is often the result of errors in software. Credit: Courtesy Image

UCSB 9/5/2014—With computing distributed across multiple machines on the cloud, errors and glitches are not easily detected before software is rolled out to the public. As a result, bugs manifest themselves after the programs have been downloaded, costing a software company time, money and even user confidence, and potentially leaving devices vulnerable to security breaches. With a grant of nearly $500,000 from the National Science Foundation, UC Santa Barbara computer scientist Tevfik Bultan and his team are studying verification techniques that can catch and repair bugs in code that manipulates and updates data in web-based software applications. Using techniques that translate software data into code that can be evaluated with mathematical logic, Bultan’s team can verify the soundness of a particular piece of software. By automating the process and adding steps to update the software as needed, crashes, perpetuated errors, vulnerabilities and other glitches will take up less time and money.

View UCSB Data Science Press Release

September 4, 2014 — A Q&A on the future of digital health

Michael Blum, director of the Center for Digital Health Innovation, speaks at a recent conference.

UCSF 9/4/2014—We recently sat down with Michael Blum, director of UCSF’s Center for Digital Health Innovation, to talk about the future of health wearables and what more accurate health data could teach us about improving patient care. Among the topics he brought up: One of the first things we are going to find is that a lot of real world health data and information about the general public looks very different than it did in highly controlled clinical trials. When we start to get these very large, detailed real-world data sets we may be very surprised to see the answers.

View UCSF Data Science Press Release

September 2, 2014 — Sierra Nevada freshwater runoff could drop 26 percent by 2100, UC study finds

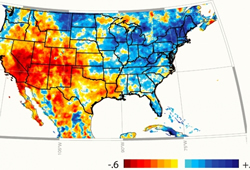

The Sierra Nevada snowpack runoff will diminish as a warmer climate encourages more plant growth at higher elevations, a UC Irvine and UC Merced study has determined. Credit: Matt Meadows/UC Merced

UCI/UCM 9/2/2014—By 2100, communities dependent on freshwater from mountain-fed rivers could see significantly less water, according to a new climate model recently released by researchers at UC Irvine and UC Merced. As the climate warms, higher elevations that are usually snow-dominated see milder temperatures; plants that normally go dormant during the winter snows remain active longer, absorbing and evaporating more water and reducing projected runoff. Using water-vapor emission rates and remote-sensing data, the authors determined relationships between elevation, climate and evapotranspiration. Greater vegetation density at higher elevations in the Kings basin with the 4.1 degrees Celsius warming projected by climate models for 2100 could boost basin evapotranspiration by as much as 28 percent, with a corresponding 26 percent decrease in river flow. The study findings appear in Proceedings of the National Academy of Sciences. Scientists have recognized for a while that something like this was possible, but no one had been able to quantify whether it could be an effect big enough to concern California water managers.

UCM release

UCI Release

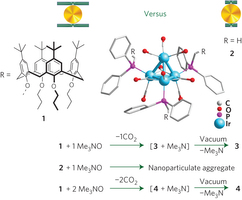

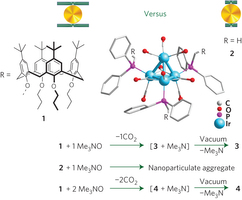

September 5, 2014 — New catalyst converts CO₂ to fuel

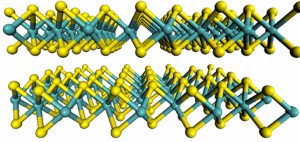

Structure of 2D molybdenum disulfide. Molybdenum atoms are shown in teal, sulfur atoms in yellow. Credit: Wang et al, Massachusetts Institute of Technology

LBNL/NERSC 9/5/2014—Scientists from the University of Illinois at Chicago have synthesized a catalyst that improves their system for converting waste carbon dioxide (CO₂) into syngas, a precursor of gasoline and other energy-rich products, bringing the process closer to commercial viability. The unique two-step catalytic process uses molybdenum disulfide and an ionic liquid to transfer electrons to CO₂ in a chemical reaction. The new catalyst improves efficiency and lowers cost by replacing expensive metals like gold or silver in the reduction reaction, directly reducing CO₂ to syngas (a mixture of carbon monoxide plus hydrogen) without the need for a secondary, expensive gasification process. Supercomputing resources at the U.S. Department of Energy’s National Energy Research Scientific Computing Center (NERSC) helped the research team confirm their experimental findings.

View LBNL/NERSC Data Science Press Release

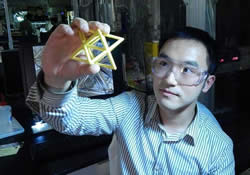

September 2, 2014 — Truly secure e-commerce: quantum crypto-keys

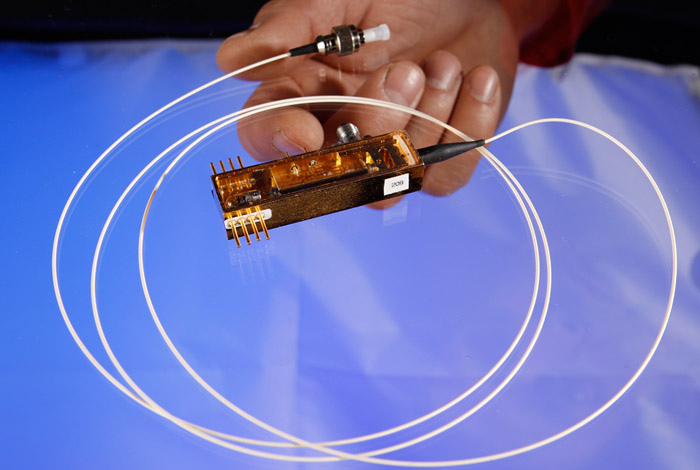

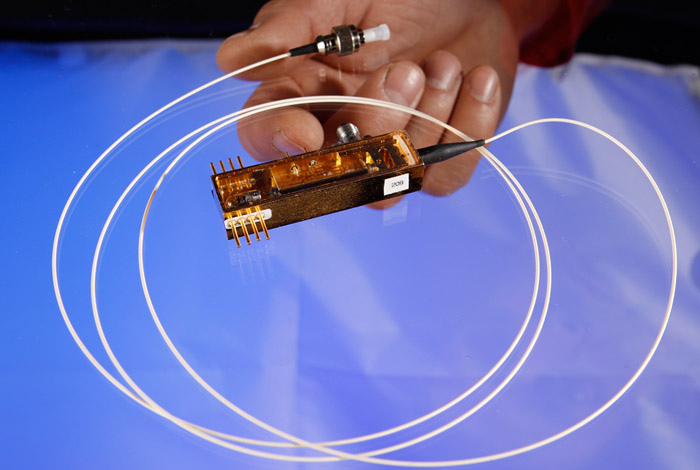

This small device developed at Los Alamos National Laboratory uses the truly random spin of light particles as defined by laws of quantum mechanics to generate a random number for use in a cryptographic key that can be used to securely transmit information between two parties.

LANL 9/2/2014—The largest information technology agreement ever signed by Los Alamos National Laboratory (LANL) brings the potential for truly secure data encryption to the marketplace after nearly 20 years of development. By harnessing the quantum properties of light for generating random numbers, and creating cryptographic keys with lightning speed, the technology enables a completely new commercial platform for real-time encryption at high data rates. If implemented on a wide scale, quantum key distribution technology could ensure truly secure commerce, banking, communications and data transfer. The Los Alamos technology is simple and compact enough that it could be made into a unit comparable to a computer thumb drive or compact data-card reader. Units could be manufactured at extremely low cost, putting them within easy retail range of ordinary electronics consumers.

View LANL Data Science Press Release

September 3, 2014 — UCLA-led consortium to focus on developing a new architecture for the Internet

UCLA 9/3/2014—Launching a critical new phase in developing the Internet of the future, UCLA will host a consortium of universities and leading technology companies to promote the development and adoption of Named Data Networking (NDN). NDN is an emerging Internet architecture that promises to increase network security, accommodate growing bandwidth requirements and simplify the creation of increasingly sophisticated applications. The NDN team’s goal is to build a replacement for Transmission Control Protocol/Internet Protocol (TCP/IP), the current underlying approach to all communication over the Internet. NDN leverages empirical evidence about what has worked on the Internet and what hasn’t, adapting to changes in usage over the past 30-plus years and simplifying the foundation for development of mobile platforms, smart cars and the Internet of Things—in which objects and devices are equipped with embedded software and are able to communicate with wireless digital networks.

View UCLA Data Science Press Release

September 2, 2014 — Genetic diversity of corn is declining in Mexico

The new study contradicts some earlier and more optimistic assessments of corn diversity in Mexico. Credit: Thinkstock photo

UCD 9/2/2014—The genetic diversity of maize, or corn, is declining in Mexico, where the world’s largest food crop originated, report researchers in Mexico and at UC Davis. The findings are particularly sobering at a time when agriculturists around the world are looking to the gene pools of staple foods like corn to dramatically increase food production for a global population expected to top 9 billion by 2050. The new study, which contradicts some earlier and more optimistic assessments of corn diversity in Mexico, appears online in the Proceedings of the National Academy of Sciences. This study—the first to examine changes in maize diversity across Mexico—compares maize diversity estimates from 38 case studies over the past 15 years with data from farmers throughout Mexico. “The question of diversity finally can be answered for maize, thanks to a unique database gathered through this binational project,” said lead author George A. Dyer of El Colegio de México, in Mexico City.

View UCD Data Science Press Release

August 27, 2014 — Encyclopedia of how genomes function gets much bigger

Berkeley Lab scientists contributed to an NHGRI effort that provides the most detailed comparison yet of how the genomes of the fruit fly, roundworm, and human function. Credit: Darryl Leja, NHGRI

LBNL 8/27/2014—A big step in understanding the mysteries of the human genome was taken in three analyses that provide the most detailed comparison yet of how the genomes of the fruit fly, roundworm, and human function. The research, appearing August 28 in the journal Nature, compares how the information encoded in the three species’ genomes is “read out,” and how their DNA and proteins are organized into chromosomes. The results add billions of entries to a publicly available archive of functional genomic data. Scientists can use this resource to discover common features that apply to all organisms. These fundamental principles will likely offer insights into how the information in the human genome regulates development, and how it is responsible for diseases.

View LBNL Data Science Press Release

August 26, 2014 — Existing power plants will spew 300 billion more tons of carbon dioxide during use

A coal-burning power plant at the Turceni Power Station in Romania. Credit: Robert and Mihaela Vicol

UCI 8/26/2014—Existing power plants around the world will pump out more than 300 billion tons of carbon dioxide over their expected lifetimes, significantly adding to atmospheric levels of the climate-warming gas, according to UC Irvine and Princeton University scientists. Using a new mathematical technique called commitment accounting, their study is the first to quantify how quickly these “committed” emissions are growing—by about 4 percent per year—as more fossil fuel-burning power plants are built. “These facts are not well known in the energy policy community, where annual emissions receive far more attention than future emissions related to new capital investments,” the paper states. The study was published in the August 26 issue of the journal Environmental Research Letters.

View UCI Data Science Press Release

August 27, 2014 — National Biomedical Computation Resource receives $9 million NIH award

National Biomedical Computation Resource director Rommie Amaro

SDSC 8/27/2014—The National Biomedical Computation Resource (NBCR) at UC San Diego has received $9 million in funding over five years from the National Institutes of Health (NIH) to allow NBCR to continue its work connecting biomedical scientists with supercomputing power and emerging information technologies. NBCR Director Rommie Amaro said that the renewed funding will make it possible for biomedical researchers to study phenomena from the molecular level to the level of the whole organ. Biomedical computation—which applies physical modeling and computer science to the field of biomedical sciences—is often a cheaper alternative to traditional experimental approaches and can speed the rate at which discoveries are made for a host of human diseases and biological processes.

View SDSC Data Science Press Release

August 26, 2014 — Photon speedway puts big data in the fast lane

Scientists envision ESnet serving as a “photon science speedway” connecting Stanford’s advanced light sources with computing resources at Berkeley Lab.

LBNL/NERSC 8/26/2014—A series of experiments conducted by Lawrence Berkeley National Laboratory (LBNL) and SLAC National Accelerator Laboratory (SLAC) researchers and collaborators is shedding new light on photosynthesis, and demonstrating how light sources and supercomputing facilities can be linked via a “photon science speedway” called ESnet to better address emerging challenges in massive data analysis. Last year, LBNL and SLAC researchers led a protein crystallography experiment at SLAC’s Linac Coherent Light Source to look at the different photoexcited states of an assembly of large protein molecules that play a crucial role in photosynthesis. Subsequent analysis of the data on supercomputers at the Department of Energy’s (DOE’s) National Energy Research Scientific Computing Center (NERSC) helped explain how nature splits a water molecule during photosynthesis, a finding that could advance the development of artificial photosynthesis for clean, green and renewable energy.

View LBNL,NERSC Data Science Press Release

August 25, 2014 — New project is the ACME of addressing climate change

LBNL 8/25/2014—Eight Department of Energy national laboratories, including Lawrence Berkeley National Laboratory (LBNL), are combining forces with the National Center for Atmospheric Research, four academic institutions and one private-sector company in the new effort. Other participating national laboratories include Argonne, Brookhaven, Lawrence Livermore, Los Alamos, Oak Ridge, Pacific Northwest and Sandia. The project, called Accelerated Climate Modeling for Energy (ACME), is designed to accelerate the development and application of fully coupled, state-of-the-science Earth system models for scientific and energy applications. Over a planned 10-year span, the project aims to conduct simulations and modeling on the most sophisticated HPC machines as they become available, i.e., 100-plus petaflop machines and eventually exascale supercomputers.

View LBNL Data Science Press Release

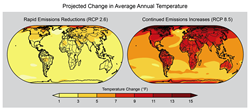

August 22, 2014 — NASA picks top Earth data challenge ideas, opens call for climate apps

OpenNEX Challenge Phase II. Credit: NASA

NASA Ames 8/22/2014—NASA has selected four ideas from the public for innovative uses of climate projections and Earth-observing satellite data. The agency also has announced a follow-on challenge with awards of $50,000 to build climate applications based on OpenNEX data on the Amazon cloud computing platform. Both challenges use the Open NASA Earth Exchange, or OpenNEX, a data, cloud computing, and knowledge platform where users can share modeling and analysis codes, scientific results, information and expertise to solve big data challenges in the Earth sciences. OpenNEX provides users a large collection of climate and Earth science satellite data sets, including global land surface images, vegetation conditions, climate observations and climate projections.

View AMES Data Science Press Release

August 21, 2014 — SDSC mourns the loss of J. Freeman Gilbert

J. Freeman Gilbert. Credit: Scripps Institution of Oceanography Archives, UC San Diego Library

SDSC 8/21/2014—In addition to being a Distinguished Professor of geophysics with the Scripps Institution of Oceanography (SIO) for more than 53 years, James Freeman Gilbert is being remembered by those at the San Diego Supercomputer Center (SDSC) for playing an integral role in securing a National Science Foundation (NSF) award to establish SDSC on the UC San Diego campus almost 30 years ago. A leading contributor in computational geophysics, seismology, earthquake sources, and geophysical inverse theory, Gilbert was the author of numerous research papers, book chapters, reviews, and other publications. He passed away on August 15, 2014 in Portland, Oregon, due to complications from a car accident. He was 83.

View SDSC Data Science Press Release

August 20, 2014 — Livermore researchers create engineered energy absorbing material

A silicone cushion with programmable mechanical energy absorption properties was produced through a 3D printing process using a silicone-based ink by Lawrence Livermore National Laboratory researchers.

LLNL 8/20/2014—Solid gels and porous foams are used for padding and cushioning, but each has its own advantages and limitations. Gels are effective but are relatively heavy and their lack of porosity gives them a limited range of compression. Foams are lighter and more compressible, but their performance is not consistent due to the inability to accurately control the size, shape and placement of voids (air pockets) when the foam is manufactured. Now, with the advent of additive manufacturing—also known as 3D printing—a team of engineers and scientists at Lawrence Livermore National Laboratory (LLNL) has found a way to design and fabricate, at the microscale, new cushioning materials with a broad range of programmable properties. LLNL researchers constructed cushions using two different configurations, and were able to model and predict the performance of each of the architectures under both compression and shear. “The ability to dial in a predetermined set of behaviors across a material at this resolution is unique, and it offers industry a level of customization that has not been seen before,” said the lead author of a paper published in the August 20 issue of Advanced Functional Materials.

View LLNL Data Science Press Release

August 19, 2014 — New project is the ACME of addressing climate change

A massive crack runs about 29 kilometers (18 miles) across the Pine Island Glacier’s floating tongue, marking the moment of creation for a new iceberg that will span about 880 square kilometers (340 square miles) once it breaks loose from the glacier. The onset of the collapse of the Antarctic Ice Sheet is one area a new project headed by Lawrence Livermore will examine. Credit: Quantum Filmmakers

LLNL 8/19/2014—High performance computing (HPC) will be used to develop and apply the most complete climate and Earth system model to address the most challenging and demanding climate change issues. Eight national laboratories, including Lawrence Livermore (LLNL), are combining forces with the National Center for Atmospheric Research, four academic institutions and one private-sector company in the new effort. Other participating national laboratories include Argonne, Brookhaven, Lawrence Berkeley (LBNL), Los Alamos (LANL), Oak Ridge, Pacific Northwest and Sandia. The project, called Accelerated Climate Modeling for Energy (ACME), is designed to accelerate the development and application of fully coupled, state-of-the-science Earth system models for scientific and energy applications. The plan is to exploit advanced software and new high performance computing machines as they become available. The initial focus will be on three climate change science drivers (the water cycle, biogeochemistry, and the cryosphere) and corresponding questions.

View LLNL Data Science Press Release

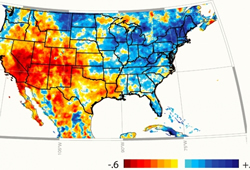

August 19, 2014 — California is deficit-spending its water

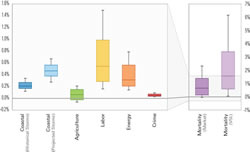

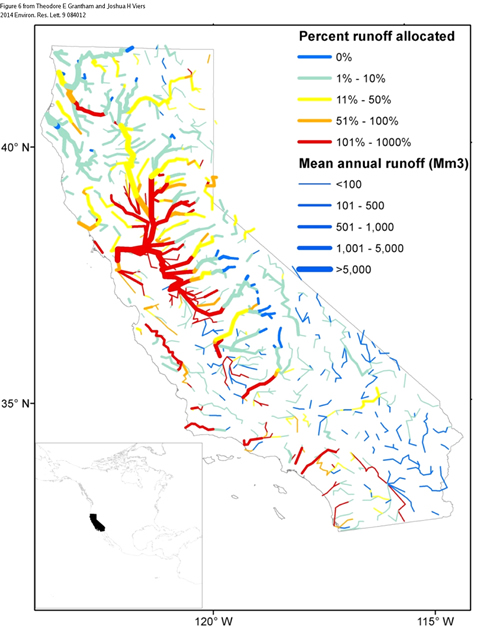

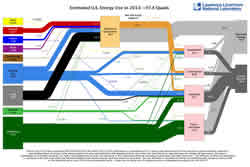

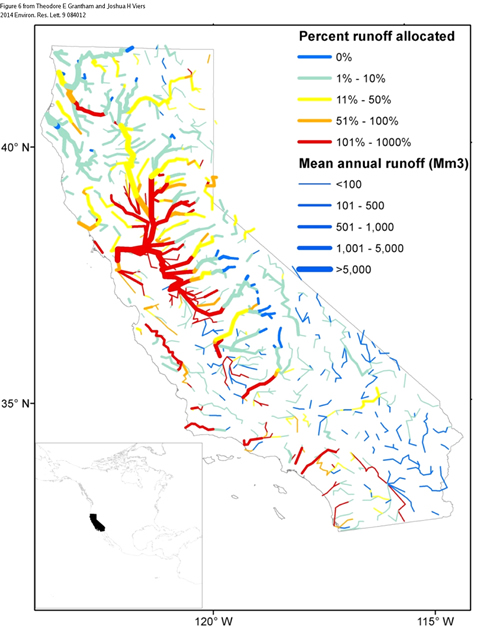

Cumulative water right allocations relative to mean annual runoff, excluding water rights for hydropower generation

UCM 8/19/2014—California is deficit-spending its water and has been for a century, according to state data analyzed recently by researchers from the University of California. UC Merced professor Joshua Viers and postdoctoral researcher Ted Grantham, with UC Davis at the time, explored the state’s database of water-rights allocations, and found that allocations in California since 1914 exceed the state’s actual water supply by five times the average annual runoff and 100 times the actual surface-water supply for some river basins. “We’re kind of in big trouble,” Viers said, considering the changing climate and the expectation that more frequent, multi-year droughts are the new normal. The analysis, entitled “100 years of California’s Water Rights System: Patterns, Trends and Uncertainty,” by Viers, the director of The Center for Information Technology Research in the Interest of Society (CITRIS) at UC Merced, and Grantham, now a scientist with the U.S. Geological Survey, was published in the August 19 issue of the journal Environmental Research Letters.

View UCM Data Science Press Release

August 19, 2014 — California has given away rights to far more water than it has

On average, California allocates to users more than five times the amount of water available in its rivers and streams. Credit: Josh Viers/UC Merced

UCD 8/19/2014—California has allocated five times more surface water than the state actually has, making it hard for regulators to tell whose supplies should be cut during a drought, reported researchers from two UC campuses. The scientists said California’s water-rights regulator, the State Water Resources Control Board, needs a systematic overhaul of policies and procedures to bridge the gaping disparity, but lacks the legislative authority and funding to do so. Ted Grantham, who conducted the analysis with UC Merced professor Joshua Viers and who explored the state’s water-rights database as a postdoctoral researcher with the UC Davis Center for Watershed Sciences, said the time is ripe for tightening the water-use accounting. Viers and Grantham, now with the U.S. Geological Survey, are working to iron out issues with the state’s water-rights database and make the information available to policymakers.

View UCD Data Science Press Release

August 18, 2014 — SPOT Suite transforms beamline science

Advanced Light Source (ALS) at Berkeley Lab. Credit: Roy Kaltschmidt

LBNL/NERSC 8/18/2014—For decades, synchrotron light sources such as the DOE’s Advanced Light Source (ALS) at LBNL have been operating on a manual grab-and-go data management model—users travel thousands of miles to run experiments at the football-field-size facilities, download raw data to an external hard drive, then process and analyze the data on their personal computers, often days later. But the deluge of data brought on by faster detectors and brighter light sources is quickly making this practice implausible. Fortunately, ALS X-ray scientists, facility users, computer and computational scientists from the Computational Research Division (CRD) and National Energy Research Scientific Computing Center (NERSC) of Lawrence Berkeley National Laboratory (LBNL) recognized this developing situation years ago and teamed up to create new tools for reducing, managing, analyzing and visualizing beamline data. The result of this collaboration is SPOT Suite, which is already transforming the way scientists run their experiments at the ALS. The goal is for beamline scientists to be able to access computational resources without having to become computer experts.

View LBNL,NERSC Data Science Press Release

August 14, 2014 — New tool makes a single picture worth a thousand — and more — images