HiPACC Data Science Press Room

The Data Science Press Room highlights computational and data science news in all fields *outside of astronomy* in the UC campuses and DOE laboratories comprising the UC-HiPACC consortium. The wording of the short summaries on this page is based on wording in the individual releases or on the summaries on the press release page of the original source. Images are also from the original sources except as stated. Press releases below appear in reverse chronological order (most recent first); they can also be displayed by UC campus or DOE lab by clicking on the desired venue at the bottom of the left-hand column.

November 6, 2014 — New software platform bridges gap in precision medicine for cancer

UCSF 11/6/2014—UC San Francisco has unveiled a new cloud-based software platform that significantly advances precision medicine for cancer. Built in partnership with Palo Alto-based company Syapse, the new platform seamlessly unites genomic testing and analysis, personalized treatment regimens, and clinical and outcomes data, crucially integrating all of these features directly into UCSF’s Electronic Medical Record (EMR) system. The project was a collaborative venture of UCSF’s Genomic Medicine Initiative (GMI) and the UCSF Helen Diller Family Comprehensive Cancer Center.

Read full UCSF press release.

November 5, 2014 — Coexist or perish

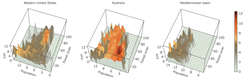

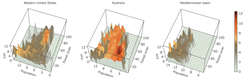

Relationship between forest cover, population density and area burned in fire-prone regions. Forest cover is the percentage area covered by trees higher than 5 meters per cell in 2000; population is number of people per cell (log transformed) in 2000; and fire is total area burned in hectares per cell (log transformed) between 1996 and 2012. The color scale for fire is to help differentiate higher peaks in area burned.

UCB/UCSB 11/5/2014—Many fire scientists have tried to get Smokey the Bear to hang up his “prevention” motto in favor of tools such as thinning underbrush and prescribed burns, which can manage the severity of wildfires while allowing them to play their natural role in certain ecosystems. But a new international research review—led by a specialist in fire at UC Berkeley’s College of Natural Resources and an associate with UC Santa Barbara’s National Center for Ecological Analysis and Synthesis—calls for changes in our fundamental approach to wildfires: from fighting fire to coexisting with fire as a natural process. “We don’t try to ‘fight’ earthquakes: we anticipate them in the way we plan communities, build buildings and prepare for emergencies,” explained lead author Max A. Moritz of UC Berkeley. “Human losses will be mitigated only when land-use planning takes fire hazards into account in the same manner as other natural hazards such as floods, hurricanes and earthquakes.” The review examines research findings from three continents and from both the natural and social sciences. The authors conclude that government-sponsored firefighting and land-use policies actually incentivize development on inherently hazardous landscapes, amplifying human losses over time. The findings were published in *Nature*.

Read full UCB release.

Read full UCSB release.

November 4, 2014 — How important is long-distance travel in the spread of epidemics?

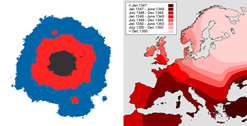

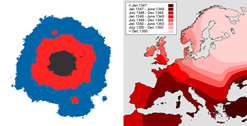

When long-distance travel is rare, epidemics spread like a slow, rippling wave, as demonstrated by the simulation (left) and the actual historical spread of the Black Death during the Middle Ages. Credit: Oskar Hallatschek, D. Sherman, and J. Salisbury

UCB 11/4/2014—The current Ebola outbreak shows how quickly diseases can spread with global jet travel. Yet predicting how such epidemics will spread remains uncertain, because the complex models in use are not fully understood, says a UC Berkeley biophysicist. Using a very simple model of disease spread, he proved that one common assumption is actually wrong. Most models have taken for granted that if disease vectors, such as humans, have any chance of “jumping” outside the initial outbreak area (by plane or train, for example), the outbreak quickly metastasizes into an epidemic. On the contrary, simulations showed that if the chance of individuals traveling away from the center of an outbreak drops off exponentially with distance (for example, if the chance of distant travel drops by half every 10 miles), the disease spreads as a relatively slow wave. But simulations also suggested that a slower “power-law” drop-off (for example, if the chance of distant travel drops by half every time the distance is doubled) would let the disease quickly get out of control. This new understanding of a simple computer model of disease spread will help epidemiologists understand the more complex models now used to predict the spread of epidemics, he said, and could also help scientists understand the spread of cancer metastases, genetic mutations in animal or human populations, invasive species, wildfires, and even rumors. The paper appears in *Proceedings of the National Academy of Sciences*.
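A back-of-envelope calculation shows why the shape of the travel-distance distribution matters so much. For n independent travelers, the sketch below computes the median distance of the single longest trip under each of the two example rules. It is not the model from the study: it assumes independent jumps, reads "the chance of distant travel" as the probability of traveling farther than a given distance, and uses a hypothetical 1-mile minimum jump for the power law.

```python
import math


def per_jump_tail_at_median(n_travelers):
    """Per-trip exceedance probability q at which the longest of n independent
    trips is exceeded with probability 1/2, i.e. the solution of (1 - q)**n == 1/2."""
    return -math.expm1(-math.log(2.0) / n_travelers)


def median_longest_jump_exponential(n_travelers, half_distance=10.0):
    # Exponential kernel: P(trip > d) = 2**(-d / half_distance),
    # i.e. the chance halves every `half_distance` miles.
    q = per_jump_tail_at_median(n_travelers)
    return -half_distance * math.log2(q)


def median_longest_jump_power_law(n_travelers, d_min=1.0):
    # Power-law (Pareto) kernel: P(trip > d) = d_min / d for d >= d_min,
    # i.e. the chance halves every time the distance doubles.
    # d_min = 1 mile is a hypothetical cutoff, not a value from the study.
    q = per_jump_tail_at_median(n_travelers)
    return d_min / q


for n in (1, 10, 1_000, 100_000):
    print(f"{n:>7} travelers: exponential {median_longest_jump_exponential(n):8.1f} mi, "
          f"power law {median_longest_jump_power_law(n):10.1f} mi")
```

Under these assumptions, multiplying the number of travelers by 100 adds only about 66 miles (10 × log2 100) to the longest exponential jump but multiplies the longest power-law jump roughly a hundredfold. As an outbreak grows, power-law travel therefore keeps seeding ever more distant new foci, consistent with the "out of control" behavior described above.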

Read full UCB press release.

November 3, 2014 — Mathematical models shed new light on cancer mutations

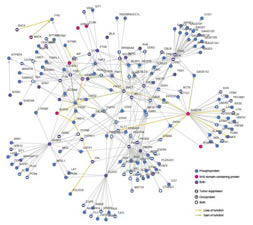

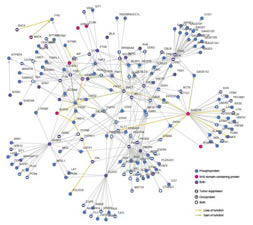

Kidney tumor network, generated using statistical mechanics, the Mathematica software package, and data from the Cancer Genome Atlas. Credit: M. AlQuraishi, et al./Harvard Medical School

NERSC 11/3/2014—A team of researchers from Harvard Medical School, using computing resources at the U.S. Department of Energy’s National Energy Research Scientific Computing Center (NERSC), has demonstrated a mathematical toolkit that can turn cancer-mutation data into multidimensional models showing how specific mutations alter the social networks of proteins in cells. From these models they can deduce which of the myriad mutations present in cancer cells might actually play a role in driving disease. At the core of this approach is an algorithm based on statistical mechanics, a branch of theoretical physics that predicts the macroscopic properties of large systems from the behavior of their microscopic components. The authors used its core principles as the basis for a platform that would analyze information housed in the National Cancer Institute’s Cancer Genome Atlas. In doing so, they stumbled upon a few unexpected findings, published November 2 in *Nature Genetics*.
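The core statistical-mechanics idea can be illustrated with a toy model that is not the authors' algorithm: each binding state of a protein is weighted by its Boltzmann factor exp(-ΔG/RT) and normalized by the partition function, so a mutation that weakens one interaction shifts occupancy toward competing partners. The partner names and free energies below are hypothetical, and protein concentrations are folded into effective free energies for simplicity.

```python
import math

RT_KCAL_PER_MOL = 0.593  # approximately R*T at 298 K, in kcal/mol


def occupancies(binding_free_energies, rt=RT_KCAL_PER_MOL):
    """Boltzmann probabilities for one protein that is either unbound
    (reference state, free energy 0) or bound to exactly one partner.

    binding_free_energies: dict mapping partner -> effective binding free
    energy in kcal/mol (more negative means tighter binding).
    """
    weights = {"unbound": 1.0}
    for partner, dg in binding_free_energies.items():
        weights[partner] = math.exp(-dg / rt)
    z = sum(weights.values())  # partition function
    return {state: w / z for state, w in weights.items()}


# Hypothetical wild-type network and a hypothetical mutation that weakens partner B.
wild_type = {"partner_A": -2.0, "partner_B": -2.0}
mutant = {"partner_A": -2.0, "partner_B": -0.5}

before, after = occupancies(wild_type), occupancies(mutant)
for state in before:
    print(f"{state:>10}: {before[state]:.3f} -> {after[state]:.3f}")
```

Even this two-partner toy shows a network-level effect: weakening one interface makes the unaffected partner more occupied, which is the kind of rewiring such models aim to detect.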

Read full NERSC press release.

November 3, 2014 — Berkeley Lab scientists ID new driver behind Arctic warming

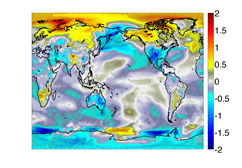

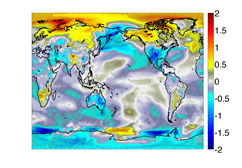

This simulation, from the Community Earth System Model, shows decadally averaged radiative surface temperature changes during the 2030s after far-infrared surface emissivity properties are taken into account. The right color bar depicts temperature change in Kelvin. Credit: LBNL

LBNL 11/3/2014—Research led by Lawrence Berkeley National Laboratory on far-infrared emission has identified a mechanism that could turn out to be a big contributor to warming in the Arctic region and melting sea ice. Far-infrared radiation (wavelengths of 15 to 100 micrometers) accounts for about half the energy radiated by the Earth’s surface, helping balance incoming solar energy. Despite its importance in the planet’s energy budget, a surface’s effectiveness in emitting far-infrared energy is difficult to measure, and its influence on the planet’s climate is not well represented in climate models. The models assume that all surfaces are 100 percent efficient in emitting far-infrared energy. However, the research found that non-frozen surfaces are poor emitters compared to frozen surfaces. Specifically, open oceans are much less efficient than sea ice at emitting in the far-infrared region of the spectrum. That discrepancy has a much bigger impact on the polar climate than today’s models indicate: the Arctic Ocean traps much of the energy in far-infrared radiation, a previously unknown phenomenon that is likely contributing to the warming of the polar climate. Simulations revealed that something similar may also happen in arid regions, such as the Tibetan plateau. The research appears in the *Proceedings of the National Academy of Sciences*.
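The "about half" figure can be checked by integrating Planck's law over the 15 to 100 micrometer band for an ideal blackbody, that is, a surface that is 100 percent efficient at emitting, as the models described above assume. This is a sketch under that idealization; the two temperatures (288 K, roughly a global-mean surface value, and 250 K as a colder, polar-like value) are illustrative choices, not inputs from the study.

```python
import math

H = 6.62607015e-34      # Planck constant, J s
C = 299_792_458.0       # speed of light, m/s
K_B = 1.380649e-23      # Boltzmann constant, J/K
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def planck_radiance(wavelength_m, temp_k):
    """Blackbody spectral radiance B_lambda(T), in W sr^-1 m^-3."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)


def band_fraction(lo_um, hi_um, temp_k, n=2000):
    """Fraction of total blackbody emission between lo_um and hi_um
    (micrometers), integrated with composite Simpson's rule (n must be even)."""
    a, b = lo_um * 1e-6, hi_um * 1e-6
    step = (b - a) / n
    total = planck_radiance(a, temp_k) + planck_radiance(b, temp_k)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * planck_radiance(a + i * step, temp_k)
    band_radiance = total * step / 3.0
    # Total radiance integrated over all wavelengths is sigma * T^4 / pi.
    return band_radiance / (SIGMA * temp_k**4 / math.pi)


for temp in (288.0, 250.0):
    print(f"{temp:.0f} K: {band_fraction(15, 100, temp):.0%} of emission falls in 15-100 um")
```

Under these assumptions the band carries roughly 46% of emission at 288 K and about 56% at 250 K, so the far infrared matters even more for cold surfaces. A real surface with band emissivity ε below 1 emits only ε times the blackbody amount in this band, which is why the lower far-infrared emissivity of open water compared with sea ice affects the energy balance.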

Read full LBNL press release.

November 3, 2014 — NCI Cloud Pilot program to boost cancer genomics data sharing, accessibility

David Haussler, Director of the UC Santa Cruz Genomics Institute

UCSC 11/3/2014—The UC Santa Cruz Genomics Institute is part of a team led by the Broad Institute of MIT and Harvard that was awarded one of three National Cancer Institute (NCI) Cancer Genomics Cloud Pilot contracts. The goal of the project, which also involves scientists at UC Berkeley, is to build a system that will enable large-scale analysis of The Cancer Genome Atlas (TCGA) and other datasets by co-locating the data and the required computing resources in one cloud environment. This co-location will let researchers across institutions bring their analytical tools and methods to the data in an efficient, cost-effective manner, thereby promoting democratization and collaboration across the cancer genomics community. The impetus for the cancer genomics cloud pilots grew from an inquiry the NCI posed in April 2013 asking the NCI grantee community to describe their most frequent computational challenges. From these responses, six general themes emerged: data access, computing capacity and infrastructure, data interoperability, training, usability, and governance.

Read full UCSC press release.

November 3, 2014 — Explosives performance key to stockpile stewardship

Adam Pacheco of shock and detonation physics presses the “fire” button during an experiment at the two-stage gas gun facility

LANL 11/3/2014—As U.S. nuclear weapons age, one essential factor in making sure that they will continue to perform as designed is understanding the fundamental properties of the high explosives that are part of a nuclear weapons system. A new video on the Los Alamos National Laboratory YouTube Channel (https://www.youtube.com/watch?v=uiSJOuRM_WE&feature=youtu.be) shows how researchers use scientific guns to induce shock waves in explosive materials to study their performance and properties. Explosives research includes small, contained detonations, gas and powder gun firings, larger outdoor detonations, large-scale hydrodynamic tests, and, at the Nevada National Security Site, underground subcritical experiments. In small-scale experiments, scientists are also looking at very basic materials such as ammonia and methane, which are of interest in planetary physics, and simple molecules like benzene to better understand chemical reactivity at pressures that exceed those at the center of the Earth. Ultimately, the data from both small- and large-scale experiments are used to validate and improve computer models and simulations of the highly integrated, complex systems that comprise a nuclear weapon, enhancing confidence in the U.S. nuclear deterrent without the need for full-scale nuclear testing.
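Gas-gun shots like those in the video are commonly reduced to shock states through the Rankine-Hugoniot jump conditions: momentum conservation across a steady shock gives pressure P = ρ0·Us·up, and the shock state in the sample follows from requiring equal pressure and particle velocity on both sides of the flyer/sample interface. This is a minimal textbook sketch, assuming each material follows a linear fit Us = c0 + s·up; the material parameters are hypothetical placeholders rather than data for any real explosive, and this is not LANL's analysis software.

```python
def hugoniot_pressure(rho0, c0, s, up):
    """Shock pressure in Pa from momentum conservation, P = rho0 * Us * up,
    using a linear shock-velocity fit Us = c0 + s * up (SI units)."""
    return rho0 * (c0 + s * up) * up


def interface_particle_velocity(flyer, target, impact_velocity, tol=1e-9):
    """Particle velocity (m/s) in the target after a planar flyer impact.

    flyer, target: (rho0, c0, s) tuples. Pressure must match at the interface;
    the flyer is decelerated, so its own particle velocity is impact_velocity - up.
    Solved by bisection: target pressure rises with up, flyer pressure falls.
    """
    lo, hi = 0.0, impact_velocity
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        mismatch = hugoniot_pressure(*target, mid) - hugoniot_pressure(
            *flyer, impact_velocity - mid
        )
        if mismatch > 0:
            hi = mid  # target pressure too high: the root lies below mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


# Hypothetical sample material: rho0 = 1800 kg/m^3, c0 = 2500 m/s, s = 2.0.
sample = (1800.0, 2500.0, 2.0)
up = interface_particle_velocity(sample, sample, impact_velocity=2000.0)
print(f"particle velocity: {up:.1f} m/s, "
      f"pressure: {hugoniot_pressure(*sample, up) / 1e9:.2f} GPa")
```

For a flyer made of the same material (a symmetric impact), the interface particle velocity is exactly half the impact velocity, which is a convenient check on the bisection; a denser, stiffer flyer drives the sample to a higher particle velocity and pressure.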

Read full LANL press release.

October 31, 2014 — $9 million gift from alumnus B. John Garrick launches Institute for the Risk Sciences at UCLA Engineering

B. John Garrick. Credit: Garrick family

UCLA 10/31/2014—UCLA engineering alumnus B. John Garrick and his wife, Amelia Garrick, have committed $9 million to launch the B. John Garrick Institute for the Risk Sciences at the UCLA Henry Samueli School of Engineering and Applied Science. The Garrick Institute will provide new knowledge and technology to assess and manage risks in order to save lives, protect the environment, and protect property from large-scale threats. The institute will improve preparation and response to threats including earthquakes, volcano eruptions, tsunamis, shifts caused by climate change, and the consequences associated with major accidents at industrial plants. It also will focus on resilience and reliability engineering, fields dedicated to preventing failures of complex systems and managing major disruptions. The institute will build on Garrick’s pioneering work in the discipline. For more than 50 years, he has played a leadership role in developing tools for risk analysis, probability theory, systems engineering and related fields. In 1993, he was elected to the National Academy of Engineering, the highest honor for a U.S. engineer. Garrick is the author of the book *Quantifying and Controlling Catastrophic Risks*.

Read full UCLA press release.

October 29, 2014 — SDSC’s Chaitan Baru named CISE Data Science Advisor at NSF

Chaitan Baru. Credit: Alan Decker

SDSC 10/29/2014—The National Science Foundation (NSF) has named Chaitan Baru, a Distinguished Scientist at the San Diego Supercomputer Center (SDSC) at UC San Diego, Senior Advisor for Data Science in the agency’s Computer and Information Science and Engineering Directorate (CISE). Baru was Associate Director for Data Initiatives at SDSC, and is now on assignment in his new position with the NSF in the greater Washington DC area. He will serve as advisor to the CISE Directorate, assisting in cross-directorate and interagency efforts pertaining to what has become known as ‘Big Data’, or what the NSF describes as “large, diverse, complex, longitudinal, and/or distributed datasets generated from instruments, sensors, Internet transactions, email, video, click streams, and/or all other digital sources available today and in the future.” CISE, one of seven NSF directorates, supports investigator-initiated research in all areas of computer and information science and engineering and fosters broad interdisciplinary collaboration.

Read full SDSC press release.

October 29, 2014 — Using radio waves to control fusion plasma density

Supercomputer simulation shows turbulent density fluctuations in the core of the Alcator C-Mod tokamak during strong electron heating. Credit: Darin Ernst/MIT

NERSC 10/29/2014—Recent fusion experiments on the DIII-D tokamak at General Atomics and the Alcator C-Mod tokamak at Massachusetts Institute of Technology (MIT) showed that beaming microwaves into the plasma can control the density at the plasma’s center, where a fusion reactor would produce most of its power. Several megawatts of microwaves mimic the way fusion reactions would supply heat to plasma electrons to keep the “fusion burn” going. The new experiments reveal that turbulent density fluctuations in the inner core intensify when most of the heat goes to electrons instead of plasma ions, as would happen in the center of a self-sustaining fusion reaction. Supercomputer simulations run at the Department of Energy’s National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory closely reproduced the experiments, showing that the electrons become more turbulent as they are more strongly heated and that this turbulence transports both particles and heat out of the plasma. The findings were presented October 27 at the 2014 American Physical Society Division of Plasma Physics Meeting. These experiments are part of a larger systematic study of turbulent energy and particle loss under fusion-relevant conditions.

Read full NERSC press release.

October 29, 2014 — UC Santa Cruz offers ‘Genome Browser in a Box’ for local installations

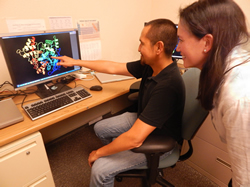

Members of the UCSC Genome Browser team

UCSC 10/29/2014—Researchers at the UC Santa Cruz Genomics Institute have just made it easier to install a copy of the popular UCSC Genome Browser on a private computer. The browser has always been readily accessible online, providing a variety of tools for studying genome sequences. But some users require a local installation so they can use the UCSC Genome Browser with private or proprietary data. A new product, called the “Genome Browser in a Box,” enables individual scientists to install the browser on their own computers in about an hour. Previously, local installation of the browser was a laborious process requiring large amounts of server space. The UCSC Genome Browser is an efficient way to access and visualize publicly available information associated with particular genome sequences. The web-based browser is a portal to a database containing the genomes of about 100 species, including human, mouse, and fruit fly.

Read full UCSC press release.

October 28, 2014 — NASA’s Pleiades supercomputer upgraded, gets one petaflops boost

The addition of SGI ICE X racks containing Intel Xeon Ivy Bridge and Haswell processors in 2014 increased Pleiades' theoretical peak processing power from 2.88 petaflops to 4.5 petaflops.

NASA Ames 10/28/2014—NASA’s flagship supercomputer, Pleiades, was recently upgraded with new, more powerful hardware to help meet the space agency’s increasing demand for high-performance computing resources. Completed in October 2014, the upgrade adds 15 SGI ICE X racks (1,080 nodes) containing the latest generation of 12-core Intel Xeon E5-2680v3 (Haswell) processors. In addition, 216 nodes of Intel Xeon E5-2680v2 (Ivy Bridge) processors, originally part of a separate test system, were integrated into Pleiades. The new hardware increased Pleiades' theoretical peak performance to more than 4.49 petaflops (quadrillion floating-point operations per second), a one-petaflops increase over the previous configuration.
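The one-petaflops figure can be roughly reproduced from the hardware description, since theoretical peak is simply nodes × sockets × cores × clock × floating-point operations per cycle. This is a back-of-envelope sketch; dual-socket nodes, base clock speeds (2.5 GHz for the 12-core E5-2680v3, 2.8 GHz for the 10-core E5-2680v2), and the per-core FLOPs per cycle are our assumptions, not figures from the release.

```python
def peak_flops(nodes, sockets_per_node, cores_per_socket, clock_hz, flops_per_cycle):
    """Theoretical peak double-precision FLOP/s: every core issues its maximum
    number of floating-point operations on every clock cycle."""
    return nodes * sockets_per_node * cores_per_socket * clock_hz * flops_per_cycle


# Assumptions (not stated in the release): dual-socket nodes, base clocks,
# 16 DP FLOPs/cycle/core for Haswell (AVX2 with two FMA units), 8 for Ivy Bridge (AVX).
haswell = peak_flops(1080, 2, 12, 2.5e9, 16)    # Xeon E5-2680v3 nodes
ivy_bridge = peak_flops(216, 2, 10, 2.8e9, 8)   # Xeon E5-2680v2 nodes

print(f"Haswell racks:    {haswell / 1e15:.2f} PF")
print(f"Ivy Bridge nodes: {ivy_bridge / 1e15:.3f} PF")
```

Under these assumptions the 1,080 Haswell nodes contribute about 1.04 petaflops, in line with the one-petaflops increase quoted above, and the Ivy Bridge nodes add roughly 0.1 petaflops more. Sustained application performance is typically well below theoretical peak.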

Read full Ames press release.

October 27, 2014 — Where did all the oil go?

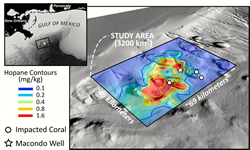

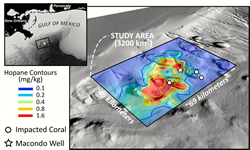

Hydrocarbon contamination from Deepwater Horizon overlaid on sea floor bathymetry, highlighting the 1,250 square mile area identified in the study.

UCSB 10/27/2014—Assessing the damage caused by the 2010 Deepwater Horizon spill in the Gulf of Mexico has been a challenge due to the environmental disaster’s unprecedented scope. One unsolved puzzle is the location of 2 million barrels of submerged oil thought to be trapped in the deep ocean. UC Santa Barbara’s David Valentine and colleagues from the Woods Hole Oceanographic Institution (WHOI) and UC Irvine have been able to describe the path the oil followed to deposit in a 1,250-square-mile patch on the deep ocean floor. Their findings appear in the *Proceedings of the National Academy of Sciences*. For this study, the scientists used data from the Natural Resource Damage Assessment process conducted by the National Oceanic and Atmospheric Administration. The United States government estimates the Macondo well’s total discharge—from the spill in April 2010 until the well was capped that July—to be 5 million barrels. The findings suggest that the undersea deposits came from Macondo oil that was first suspended in the deep ocean and then rained down 1,000 feet to settle on the sea floor without ever reaching the ocean surface.

Read full UCSB press release.

October 27, 2014 — Novel biosensor technology could allow rapid detection of Ebola virus

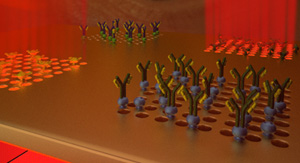

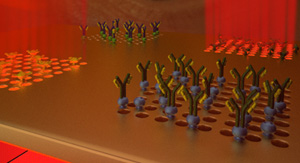

Proposed Ebola virus biosensor: The transmission of light through plasmonic nanohole arrays changes with the binding of specific proteins or virus particles.

UCSC 10/27/2014—Ali Yanik, assistant professor of electrical engineering, is focused on creating a low-cost biosensor that can detect specific viruses without the need for skilled operators or expensive equipment. “We need a platform for virus detection that is like the pregnancy tests you can use at home,” Yanik said. He is focusing on the Lassa virus, which, like Ebola, causes hemorrhagic fever; Lassa infects nearly half a million people every year in Africa and kills more people than Ebola, although it doesn’t make the news. Another major focus is the detection and isolation of circulating tumor cells in the blood of cancer patients. These cells spread cancer to other parts of the body (metastasis), so detecting them in blood samples can have important prognostic and therapeutic implications.

Read full UCSC press release.

October 27, 2014 — Marvin L. Cohen to receive the Materials Research Society’s highest honor

Marvin L. Cohen, 2014 Von Hippel Award recipient

UCB 10/27/2014—The 2014 Von Hippel Award, the Materials Research Society’s highest honor, will be presented to Marvin L. Cohen, University Professor of Physics at UC Berkeley and senior scientist in the Materials Sciences Division of the Lawrence Berkeley National Laboratory. Cohen is being recognized for “explaining and predicting properties of materials and for successfully predicting new materials using microscopic quantum theory.” Cohen is recognized internationally as the leading expert in theoretical calculations of the ground-state properties and elementary excitations of real materials systems. Prior to his seminal work, theoretical studies of solids involved idealized models, but Cohen moved the field toward accurate calculations of real materials, leading to physical theories and computational approaches capable of describing and predicting basic properties of materials.

Read full UCB press release.

October 26, 2014 — Faster switching helps ferroelectrics become viable replacement for transistors

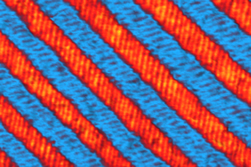

The herringbone pattern of nanoscale domains is key to enabling faster switching in ferroelectric materials. For scale, each tiny domain is only about 40 nanometers wide. Each colored band is made up of many tiny domains. Credit: Ruijuan Xu and Lane W. Martin/UC Berkeley

UCB 10/26/2014—Ferroelectric materials, commonly used in transit cards, gas grill igniters, video game memory, and other applications, could become strong candidates for use in next-generation computers. Ferroelectrics are non-volatile: they can remain in one polarized state or another without power, so stored information is retained if the electricity goes out and is later restored, and people wouldn’t lose their data. What has held ferroelectrics back from wider use as on/off switches in integrated circuits? Speed. Now, thanks to new research led by scientists at UC Berkeley and the University of Pennsylvania, an easy way to improve the switching speed of ferroelectric materials makes them viable candidates for low-power computing and electronics. Their study was published October 26 in *Nature Materials*.

Read full UCB press release.

October 23, 2014 — Water and gold: A promising mix for future batteries

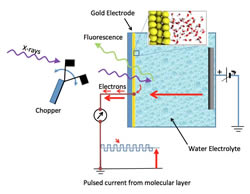

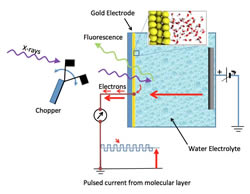

Schematic of the gold-water electrochemical cell under testing conditions

LBNL/NERSC 10/23/2014—For the first time, researchers at Lawrence Berkeley National Laboratory (LBNL) have observed the molecular structure of liquid water at a gold surface under different charging conditions, as in a battery. These experiments yield curves of x-ray absorption versus x-ray energy (spectra) that reflect how water molecules within nanometers of the gold surface absorb the x-rays. To translate that information into molecular structure, the team also developed computational techniques. Using supercomputers at LBNL’s National Energy Research Scientific Computing Center (NERSC), they ran large molecular dynamics simulations of the gold-water interface and then predicted the x-ray absorption spectra of representative structures from those simulations. It turns out that the inert gold surface can induce significant numbers of water molecules to bond to the gold instead of hydrogen-bonding to each other. The findings were published October 23 in *Science*.
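Comparing simulations with a measured spectrum usually involves averaging computed spectra over many simulation snapshots, because a liquid samples many local structures at once. Below is a minimal sketch of that averaging step, assuming each snapshot's computed spectrum is a list of (energy, intensity) transitions broadened by Gaussians of fixed width. The transition energies are hypothetical, and the team's actual procedure for selecting and weighting representative structures may differ.

```python
import math


def broadened_spectrum(transitions, energies, width=0.5):
    """Sum of Gaussian-broadened (energy_eV, intensity) transitions on an energy grid."""
    norm = 1.0 / (width * math.sqrt(2.0 * math.pi))
    return [
        sum(i * norm * math.exp(-0.5 * ((e - e0) / width) ** 2) for e0, i in transitions)
        for e in energies
    ]


def ensemble_average(snapshots, energies, width=0.5):
    """Average the broadened spectra of all snapshots with equal weight."""
    spectra = [broadened_spectrum(t, energies, width) for t in snapshots]
    return [sum(values) / len(spectra) for values in zip(*spectra)]


grid = [534.0 + 0.1 * k for k in range(61)]  # 534-540 eV window (hypothetical)
snapshots = [  # hypothetical (energy_eV, intensity) transitions for two snapshots
    [(535.2, 0.6), (537.8, 1.0)],
    [(535.6, 0.9), (538.3, 0.8)],
]
spectrum = ensemble_average(snapshots, grid)
peak = grid[spectrum.index(max(spectrum))]
print(f"averaged spectrum peaks near {peak:.1f} eV")
```

Equal weighting treats every snapshot as an equally likely configuration, which is appropriate when snapshots are drawn from an equilibrium trajectory; a clustering step that picks representative structures would instead weight each cluster by its population.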

Read full LBNL press release.

Read full NERSC press release.

October 23, 2014 — Desert streams: Deceptively simple

Dryland channels exhibit very simple topography despite being shaped by volatile rainstorms. Credit: Katerina Michaelides

UCSB 10/23/2014—Rainstorms drive complex landscape changes in deserts, particularly in dryland channels, which are shaped by flash flooding. Paradoxically, such desert streams have surprisingly simple topography, with a smooth, straight, and symmetrical form that until now has defied explanation. That paradox has been resolved in newly published research by two researchers at UC Santa Barbara’s Earth Research Institute, who modeled dryland channels using data collected from the semi-arid Rambla de Nogalte in southeastern Spain. The pair show that simple topography in dryland channels is maintained by complex interactions among rainstorms, the stream flows the storms generate in the river channel, and sediment grains on the riverbed. They found that dryland channel width fluctuates downstream, and their observations show that grain size (roughness) also fluctuates, from sand to gravel, in a downstream direction. They also simulated extreme flows to determine the volume of flow necessary to reshape the channel completely. Their findings appear in the journal *Geology*.

Read full UCSB press release.

October 23, 2014 — One giant step for ocean diversity

Scientists at UCSB’s Marine Science Institute use this quad pod camera array to capture underwater images of marine habitats. Credit: Spencer Bruttig

UCSB 10/23/2014—A new marine Biodiversity Observation Network (BON) is centered on the Santa Barbara Channel, where various groups have been gathering a breadth of scientific data ranging from intertidal monitoring to physical oceanographic measurements. Existing diversity data streams will be augmented with new technology and compiled into a comprehensive dataset on species richness. Funded with $5 million by NASA, the Bureau of Ocean Energy Management (BOEM), and the National Oceanic and Atmospheric Administration (NOAA), and led by UC Santa Barbara, the prototype marine BON is designed to fill a gap not addressed by the Group on Earth Observations (GEO) BON, which focuses on terrestrial biomes. The prototype marine BON seeks to eventually cover a huge range of biodiversity in the oceans. Ultimately, the body of knowledge it amasses will mesh with essential biodiversity variables (EBVs), the metrics adopted by GEO BON to measure biodiversity across the Earth. In this way, the measurements generated through the marine BON may one day be part of a global assessment of biodiversity.

Read full UCSB press release.

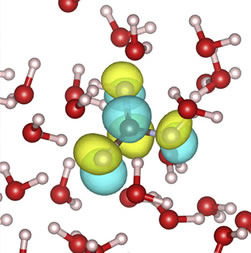

October 23, 2014 — Probing the surprising secrets of carbonic acid

Although carbonic acid exists for only a fraction of a second before changing into a mix of hydrogen and bicarbonate ions, it is critical to both the health of the atmosphere and the human body.

NERSC 10/23/2014—When carbon dioxide dissolves in water, about 1 percent of it forms carbonic acid, which almost immediately dissociates into bicarbonate anions and protons. Despite its fleeting existence—about 300 nanoseconds—carbonic acid is a crucial intermediate species in the equilibrium between carbon dioxide, water, and many minerals. In biology it is important in the buffering of blood and other bodily fluids; in geology, it plays a crucial role in the carbon cycle, the exchange of carbon dioxide between the atmosphere and the oceans. The short life span of carbonic acid in water has made it extremely difficult to study. Now Lawrence Berkeley National Laboratory researchers have produced the first X-ray absorption spectroscopy (XAS) measurements for aqueous carbonic acid. The XAS measurements, obtained at LBNL’s Advanced Light Source (ALS), agreed strongly with supercomputer predictions obtained at the National Energy Research Scientific Computing Center (NERSC). The combination of theoretical and experimental results provides new and detailed insights that should benefit the development of carbon sequestration (storing atmospheric carbon dioxide underground) and mitigation technologies and improve our understanding of how carbonic acid regulates the pH of blood. The study findings were published in *Chemical Physics Letters*.
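To see why a lifetime of roughly 300 nanoseconds makes carbonic acid so hard to study, here is a sketch assuming simple first-order decay and reading "about 300 nanoseconds" as the mean lifetime τ (an assumption; the release does not say whether the figure is a mean lifetime or a half-life).

```python
import math

MEAN_LIFETIME_S = 300e-9  # assumed mean lifetime tau, in seconds


def surviving_fraction(t_seconds, tau=MEAN_LIFETIME_S):
    """Fraction of carbonic acid not yet dissociated after t, for first-order decay exp(-t/tau)."""
    return math.exp(-t_seconds / tau)


half_life = MEAN_LIFETIME_S * math.log(2.0)  # time for half the molecules to dissociate
print(f"half-life: {half_life * 1e9:.0f} ns")
print(f"remaining after 1 microsecond: {surviving_fraction(1e-6):.1%}")
```

Under this assumption only about 3.6% of the molecules remain after a single microsecond, which illustrates why the species is so difficult to isolate and probe directly.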

Read full NERSC press release.

October 22, 2014 — New tool identifies high-priority dams for fish survival

Long Valley Dam on the Owens River is one of 181 California dams UC Davis researchers identified as candidates for increased water flows to protect native fish downstream. Credit: Stephen Volpin

UCD 10/22/2014—Scientists from UC Davis and UC Merced have identified 181 California dams that may need to increase water flows to protect native fish downstream. The screening tool developed by UC Davis’s Center for Watershed Sciences to select “high-priority” dams may be particularly useful during drought years amid competing demands for water. The study started with an analysis of 1,400 California dams in several databases, and focused on evaluating 753 large dams, screening them for evidence of altered water flows and damage to fish. Nearly a quarter of them (181) were identified as having flows that may be too low to sustain healthy fish populations. A 2011 study found that 80 percent of California’s native fish are at risk of extinction if present trends continue. “It is unpopular in many circles to talk about providing more water for fish during this drought, but to the extent we care about not driving native fish to extinction, we need a strategy to keep our rivers flowing below dams,” said lead author Ted Grantham, a postdoctoral researcher at UC Davis during the study and currently a research scientist with the U.S. Geological Survey. The findings were published October 15 in the journal *BioScience*.

Read full UCD press release.

October 22, 2014 — UCSF team awarded multimillion-dollar agreement with CDC


UCSF 10/22/2014—A UC San Francisco-based consortium has been awarded a multimillion-dollar, five-year cooperative agreement with the U.S. Centers for Disease Control and Prevention (CDC) to conduct economic modeling of disease prevention in five areas: HIV, hepatitis, sexually transmitted infections (STIs), tuberculosis (TB), and school health. Called the Consortium for the Assessment of Prevention Economics (CAPE), it will conduct economic analyses including costing, cost-effectiveness analysis, cost-benefit analysis, resource allocation, and return on investment. CAPE (led by James G. Kahn, MD, MPH, and Paul Volberding, MD, both faculty in Global Health Sciences) includes 39 investigators from UCSF, Stanford University, UC Berkeley, UC Davis, UC San Diego, the San Francisco Department of Public Health, Health Strategies International, and PATH. CAPE will be based at the UCSF Philip R. Lee Institute for Health Policy Studies; it was awarded $1.6 million for the first year and $8 million over the full project period.
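Two of the analyses named above rest on simple standard formulas: the incremental cost-effectiveness ratio (ICER), which is the extra cost per extra unit of health benefit when one intervention replaces another, and return on investment. This is a minimal sketch; the program costs and effects are hypothetical, and real analyses typically add discounting, uncertainty analysis, and detailed costing.

```python
def icer(cost_new, effect_new, cost_old, effect_old):
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect
    (e.g. per quality-adjusted life year gained or per infection averted)."""
    delta_effect = effect_new - effect_old
    if delta_effect == 0:
        raise ValueError("equal effects: ICER is undefined")
    return (cost_new - cost_old) / delta_effect


def return_on_investment(benefit, cost):
    """Net monetary return per dollar spent: (benefit - cost) / cost."""
    return (benefit - cost) / cost


# Hypothetical prevention program compared with the status quo.
ratio = icer(cost_new=1_500_000, effect_new=120, cost_old=900_000, effect_old=100)
roi = return_on_investment(benefit=2_000_000, cost=1_500_000)
print(f"ICER: ${ratio:,.0f} per QALY gained; ROI: {roi:.0%}")
```

A decision-maker would then compare the ICER with a willingness-to-pay threshold per QALY; that threshold is a policy choice, not an output of the calculation.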

Read full UCSF press release.

October 21, 2014 — NAS Hyperwall visualization system upgraded with Ivy Bridge nodes

The hyperwall’s 128 nodes are connected to a 128-screen tiled LCD wall at the NAS facility, arranged in an 8x16 configuration measuring 23-ft. wide by 10-ft. high. The nodes can display, process, and share data among themselves, allowing the system to display a single image across all the screens or to display configurations of data in “cells.” Credit: NASA/Ames

NASA Ames 10/21/2014—The hyperwall at the NASA Advanced Supercomputing (NAS) Division at NASA Ames Research Center is one of the largest and most powerful visualization systems in the world, providing a supercomputer-scale environment for NASA researchers to display and analyze high-dimensional datasets in a multitude of ways. And it just got better. Systems and visualization engineers increased the hyperwall’s peak processing power from 9 to 57 teraflops, quadrupled the amount of memory, and nearly tripled the interconnect bandwidth, while simultaneously decreasing the system's physical footprint and overall power consumption. This improved visualization capability will enable more detailed analysis of simulation results produced on the NAS facility's supercomputers, enhancing the ability of scientists and engineers to gain greater insight from their research. It will also help meet the challenges associated with analyzing the growing archive of high-resolution observational data gathered from NASA instruments such as telescopes, Earth-observing satellites, and planetary rovers.

Read full NASA Ames press release.

October 20, 2014 — Physicists solve longstanding puzzle of how moths find distant mates

Moths from the species Bombyx mori (the domesticated silk moth, whose caterpillars are the Chinese silkworm) were used for the study.

UCSD 10/20/2014—How male moths locate females flying hundreds of meters away has long mystified scientists. Researchers know the moths use pheromones. Yet even when those chemical odors are widely dispersed in a windy, turbulent atmosphere, the insects still manage to fly in the right direction over hundreds of meters, guided only by random puffs of their mates’ pheromones spaced tens of seconds apart. Three physicists have now come up with a mathematical explanation for the moths’ remarkable ability, published in the October issue of Physical Review X. They developed a statistical approach to trace the evolution of the trajectories of fluid parcels in a turbulent airflow, which then allowed them to derive a generalized solution for the signal that moths sense while searching for food, mates, and other things necessary for survival. UCSD physics professor Massimo Vergassola, initially trained in statistical physics and now working at the intersection of biology and physics in a mathematical discipline called “quantitative biology,” said the results could be applied widely in agriculture or robotics.

Read full UCSD press release.

October 20, 2014 — DOE’s high-speed network to boost Big Data transfers by extending 100G connectivity across Atlantic

LBNL 10/20/2014—The Department of Energy’s (DOE’s) Energy Sciences Network, or ESnet, is deploying four new high-speed transatlantic links, using network capacity leased from the owners of four undersea cables, giving researchers at America’s national laboratories and universities ultra-fast access to scientific data from the Large Hadron Collider (LHC) and other research sites in Europe. ESnet’s transatlantic extension will deliver a total capacity of 340 gigabits per second (Gbps) and serve dozens of scientific collaborations. To maximize the resiliency of the new infrastructure, ESnet equipment in Europe will be interconnected by dedicated 100 Gbps links from the pan-European networking organization GÉANT. Funded by the DOE’s Office of Science and managed by Lawrence Berkeley National Laboratory, ESnet provides advanced networking capabilities and tools to support U.S. national laboratories, experimental facilities, and supercomputing centers. Among the first beneficiaries will be particle physicists, but significant network traffic across the Atlantic is also expected from other data-intensive fields such as astrophysics, materials science, genomics, and climate science.

Read full LBNL press release.

October 20, 2014 — Campus mourns the loss of David Wessel, pioneer in music and science

UCB 10/20/2014—David L. Wessel, UC Berkeley professor and a groundbreaking researcher, scholar, and performing artist who thrived in the intersections of music and science, died on October 13 at age 72. Wessel’s early research on the musical role of psychoacoustics—a branch of science that studies psychological and physiological responses associated with sound—laid the foundations for much of his career. His work in the 1970s inspired the creation of some of the first computer software for analyzing, understanding, and using musical material. He championed the use of personal computers for music research and creation while director of pedagogy and software development at IRCAM, an important French institute for research into the science of music and sound and avant-garde electroacoustical art music. He conducted pioneering research in music perception, audio signal processing, computer music, musical applications of machine learning and neural networks, the design and use of new musical instruments, novel approaches to the analysis and synthesis of musical material, and communication protocols for electronic musical devices. He also mentored UC Berkeley students in music, computer science, engineering, statistics, and psychology.

Read full UCB press release.

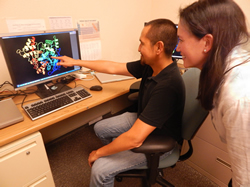

October 20, 2014 — Supercomputers link proteins to drug side effects

LLNL researchers Monte LaBute (left) and Felice Lightstone (right) were part of a team that published an article in PLOS ONE detailing the use of supercomputers to link proteins to drug side effects. Credit: Julie Russell/LLNL

LLNL 10/20/2014—New medications have helped millions of Americans. But the drug creation process often misses many adverse drug reactions (ADRs), or side effects, which kill at least 100,000 patients a year. Because it is cost-prohibitive to experimentally test a drug candidate against all proteins, pharmaceutical companies usually test only a minimal set of proteins during the early stages of drug discovery. As a result, ADRs can remain undetected through the later stages of drug development and clinical trials, and may even reach the marketplace. Now, Lawrence Livermore National Laboratory (LLNL) researchers have developed a high-tech method of testing for ADRs outside the laboratory: an algorithm, run on supercomputers, that draws on public databases of drug compounds and proteins to reliably identify proteins that cause medications to have certain ADRs. The LLNL team showed that in two categories of disorders, vascular disorders and neoplasms, their computational model for predicting side effects from off-target proteins in the early stages of drug discovery was more predictive than current statistical methods. The team published its findings in the journal PLOS ONE.

Read full LLNL press release.

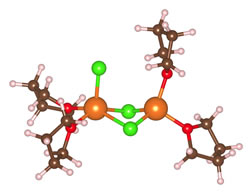

October 16, 2014 — Dispelling a misconception about Mg-ion batteries

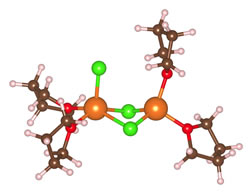

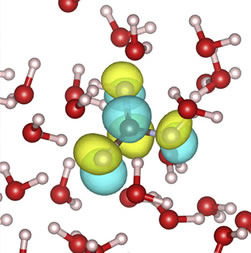

Using first-principles molecular dynamics simulations, LBNL researchers found that Mg-ions (orange) are coordinated by only four or five nearest neighbors in the dichloro-complex electrolyte rather than six as was widely believed.

LBNL/NERSC 10/16/2014—Lithium (Li)-ion batteries power our laptops, tablets, cell phones, and a host of small gadgets and devices. Future automotive applications, however, will need rechargeable batteries with greater energy density, lower cost, and greater safety. Hence the big push in the battery industry to develop an alternative to Li-ion technology. One promising alternative would be a battery based on a multivalent ion, such as magnesium (Mg). Whereas a Li-ion has a charge of +1 and provides a single electron for an electrical current, a Mg-ion has a charge of +2. In principle, that means the same density of Mg-ions can provide twice the electrical current of Li-ions. Mg-ion batteries would also be safer and less expensive than Li-ion batteries. However, the additional charge on a multivalent ion creates other problems: such ions move more slowly. It has long been believed that they become surrounded in the battery’s electrolyte by oppositely charged ions and solvent molecules, slowing their motion and hampering the development of Mg-ion batteries. New findings from a series of computer simulations, however, indicate that the problem may be less severe than feared. The results have been published in the Journal of the American Chemical Society.
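The “twice the current” argument is charge bookkeeping. For a single ionic species, current density is J = n·z·e·v, where n is the number density, z the valence, e the elementary charge, and v the drift speed. The Python sketch below illustrates that relation under stated assumptions. The number density and drift speed are hypothetical values chosen only for illustration, not figures from the study. It shows the doubling at equal speed and how slower ion motion can cancel it.

```python
# Elementary charge in coulombs (exact by definition in the 2019 SI).
ELEMENTARY_CHARGE_C = 1.602176634e-19


def drift_current_density(n_per_m3: float, valence: int, drift_speed_m_s: float) -> float:
    """Current density J = n * z * e * v (A/m^2) carried by one ionic species."""
    return n_per_m3 * valence * ELEMENTARY_CHARGE_C * drift_speed_m_s


# Hypothetical values for illustration only; not taken from the study.
n = 1.0e26  # ions per cubic meter
v = 1.0e-6  # drift speed, m/s

j_li = drift_current_density(n, 1, v)           # Li+, charge +1
j_mg_same = drift_current_density(n, 2, v)      # Mg2+ at the same drift speed
j_mg_slow = drift_current_density(n, 2, v / 2)  # Mg2+ moving half as fast

print(f"Mg/Li at equal speed: {j_mg_same / j_li:.2f}")
print(f"Mg/Li at half speed:  {j_mg_slow / j_li:.2f}")
```

The halved drift speed is a made-up case. It shows why the mobility question in the simulations matters, not how fast Mg-ions actually move.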

Read full NERSC press release.

Read full LBNL press release.

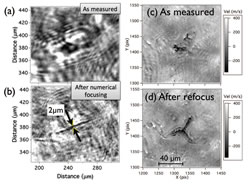

October 14, 2014 — Serendipitous holography reveals hidden cracks in shocked targets

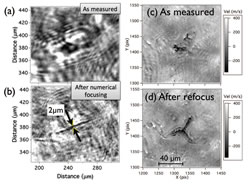

When a tool called the velocity interferometer (VISAR) was used in 2D, holographic properties became apparent: numerical processing recovered some 3D information from the 2D image.

LLNL 10/14/2014—A new technique for extracting 3D image information from a high-speed photograph can “freeze” a target’s motion and reveal hidden features. This technique, developed at Lawrence Livermore National Laboratory, is particularly applicable to targets that are “shocked.” Shock physics is the study of how matter subjected to a shock wave’s high pressures and densities cracks, crumbles, and disintegrates. The process uses a velocity interferometer (VISAR)—an instrument in widespread use at national laboratories for many years—in a novel manner. Traditionally, VISAR is used to measure a target along a line (1D measurement) or at a single point (zero-D measurement). The team instead used VISAR in 2D to make snapshot images, using high-resolution detectors similar to those found in digital cameras, plus an extremely short laser flash to freeze the target motion. When the target accidentally moved, blurring the cracks, the researchers realized that numerical processing of the data could recover some 3D information from the 2D image—effectively doing holography—and bring the originally blurred features into focus. The ability to better image tiny cracks as they grow and change on short timescales, even when the target is moving and often out of focus, could aid future studies of materials undergoing brittle fracture after shock loading. The findings are published in Review of Scientific Instruments.

Read full LLNL press release.

October 14, 2014 — Four Corners methane hotspot points to coal-related sources

Los Alamos National Laboratory measurement instruments were placed in the field to analyze power plant emissions in the Four Corners area of the U.S. Southwest.

LANL 10/14/2014—A large, persistent “hot spot” of methane (natural gas) covering 2,500 square miles (about half the area of Connecticut) has existed over the Four Corners intersection of Arizona, Colorado, New Mexico, and Utah since 2003. Remote sensing observations taken continuously by Los Alamos National Laboratory (LANL) in 2011 and 2012 showed large morning increases in methane, and a European satellite had already measured methane over the area daily from 2003 to 2009. After seeing that earlier data, a team of LANL, NASA, and University of Michigan scientists followed up with high-resolution regional atmospheric modeling based on the region’s current methane emissions as reported by the Environmental Protection Agency (EPA). When they compared the simulated methane with both ground and satellite observations, they found that the EPA figures were too low by a factor of three. The team concludes that the earlier emissions came from coal-bed methane operations that preceded widespread hydraulic fracturing for oil or gas in the area. Methane is 25 times more potent a greenhouse gas than carbon dioxide at trapping heat in the atmosphere and contributes to global warming. The findings were reported in Geophysical Research Letters.
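The two multipliers in this item combine with simple arithmetic. Observed emissions are about three times the inventory, and methane is weighted at 25 times CO2. The Python sketch below illustrates the calculation. The inventory value is a hypothetical placeholder, not a figure from the EPA or the study, and the function names are made up for this example.

```python
# Multipliers quoted in the release.
GWP_CH4 = 25               # methane's heat-trapping potency relative to CO2
UNDERESTIMATE_FACTOR = 3   # observed emissions / EPA inventory, per the study


def co2_equivalent_tonnes(ch4_tonnes: int) -> int:
    """Convert a methane mass to a CO2-equivalent mass using GWP_CH4."""
    return ch4_tonnes * GWP_CH4


def implied_emissions(inventory_ch4_tonnes: int) -> int:
    """Scale an inventory estimate up by the reported underestimate factor."""
    return inventory_ch4_tonnes * UNDERESTIMATE_FACTOR


# Hypothetical inventory value for illustration only.
inventory = 100_000  # tonnes of CH4 per year
implied = implied_emissions(inventory)
gap_co2e = co2_equivalent_tonnes(implied - inventory)

print(f"implied CH4: {implied:,} t/yr; unaccounted CO2e: {gap_co2e:,} t/yr")
```

Any real estimate would start from the actual inventory numbers and the study’s own uncertainty ranges. This sketch shows only how the two factors combine.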

Read full LANL press release.

October 13, 2014 — Interdisciplinary Institute for Social Sciences to tap new potential of researchers

Credit: ISS http://socialscience.ucdavis.edu/about-iss/iss-news/interdisciplinary-institute-for-social-sciences-to-tap-new-potential-of-researchers-at-uc-davis

UCD 10/13/2014—A new Institute for Social Sciences at UC Davis will promote interdisciplinary research in the social sciences to address challenges within a rapidly changing society, tackling the explosion of new data from sources as varied as Twitter and neuroscience imaging techniques. As an incubator of new ideas, the institute will support work that reaches across culture, class, social norms, politics, mobility, economics, values, technology, language, communication, and history, according to its new director Joseph Dumit, a professor of anthropology and science and technology studies at UC Davis. “Social scientists use data to learn empirically about the forces shaping our practices, our interactions, our knowledge and our decisions,” said Dumit. “We need new, interdisciplinary approaches to make the most of these types of data, since today’s concrete problems don’t respect disciplinary lines.” The institute also will expand the Social Science Data Service, which acquires and curates data on society, to offer a wider variety of databases and help researchers access and more creatively analyze data.

Read full UCD press release.

October 13, 2014 — UC Santa Cruz creates new Department of Computational Media

Michael Mateas is chair of the new Department of Computational Media. Credit: C. Lagattuta

UCSC 10/13/2014—With the creation of a Department of Computational Media in the Baskin School of Engineering, UC Santa Cruz has established an academic home for a new interdisciplinary field that is concerned with computation as a medium for creative expression. As a discipline, computational media combines the theories and research approaches of the arts and humanities with those of computer science to analyze, explore, and enable the computer as a medium for creative expression. The new department, which builds on the strong programs UC Santa Cruz has established in computer game design, is the first of its kind in the world, said Michael Mateas, chair of the department and director of the Center for Games and Playable Media at UCSC. In addition to games, other examples of computational media include social media, smart-phone apps, and virtual reality experiences. The common thread among all of them is that they require large amounts of computation and feature a level of interactivity that can only be created through computer technology. Said Mateas: “We’re seeing the birth of a fundamentally new form of expressive communication.”

Read full UCSC press release.

October 10, 2014 — Randles receives National Institutes of Health award to pursue cancer research

Lawrence Livermore computational scientist Amanda Randles received a Director’s Early Independence Award from the National Institutes of Health.

LLNL 10/10/2014—Lawrence Livermore National Laboratory computational scientist Amanda Randles received a Director’s Early Independence Award from the National Institutes of Health (NIH) to develop a way of predicting likely sites of cancer metastasis—a method that combines personalized, massively parallel computational models with experimental approaches. “Building a detailed, realistic model of human blood flow is a formidable mathematical and computational challenge requiring large-scale fluid models as well as explicit models of suspended bodies like red blood cells,” Randles said. “This will require high-resolution modeling of cells in the blood stream, and necessitate significant computational advances.” The goal is to develop a method to simulate the flow of realistic levels of cells through the circulatory system, thereby gaining insight into mechanisms that underlie disease progression and localization. Randles will receive about $2.5 million over five years; the NIH Common Fund award provides funding to encourage exceptional young scientists to pursue “high risk, high reward” independent research in biomedical and behavioral science.

Read full LLNL press release.
