HiPACC Data Science Press Room. From: UCSB

The Data Science Press Room highlights computational and data science news in all fields *outside of astronomy* in the UC campuses and DOE laboratories comprising the UC-HiPACC consortium. The wording of the short summaries on this page is based on wording in the individual releases or on the summaries on the press release page of the original source. Images are also from the original sources except as stated. Press releases below appear in reverse chronological order (most recent first).

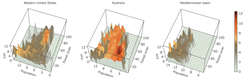

November 5, 2014 — Coexist or perish

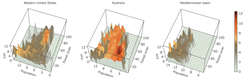

Relationship between forest cover, population density and area burned in fire-prone regions. Forest cover is the percentage area covered by trees higher than 5 meters per cell in 2000; population is number of people per cell (log transformed) in 2000; and fire is total area burned in hectares per cell (log transformed) between 1996 and 2012. The color scale for fire is to help differentiate higher peaks in area burned.

UCB/UCSB 11/5/2014—Many fire scientists have tried to get Smokey the Bear to hang up his “prevention” motto in favor of tools such as thinning underbrush and prescribed burns, which can manage the severity of wildfires while allowing them to play their natural role in certain ecosystems. But a new international research review—led by a specialist in fire at UC Berkeley’s College of Natural Resources and an associate with UC Santa Barbara’s National Center for Ecological Analysis and Synthesis—calls for changes in our fundamental approach to wildfires: from fighting fire to coexisting with fire as a natural process. “We don’t try to ‘fight’ earthquakes: we anticipate them in the way we plan communities, build buildings and prepare for emergencies,” explained lead author Max A. Moritz, UCB. “Human losses will be mitigated only when land-use planning takes fire hazards into account in the same manner as other natural hazards such as floods, hurricanes and earthquakes.” The review examines research findings from three continents and from both the natural and social sciences. The authors conclude that government-sponsored firefighting and land-use policies actually incentivize development on inherently hazardous landscapes, amplifying human losses over time. The findings were published in Nature.

Read full UCB release.

Read full UCSB release.

October 27, 2014 — Where did all the oil go?

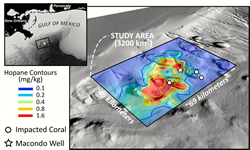

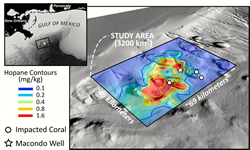

Hydrocarbon contamination from Deepwater Horizon overlaid on sea floor bathymetry, highlighting the 1,250 square mile area identified in the study.

UCSB 10/27/2014—Assessing the damage caused by the 2010 Deepwater Horizon spill in the Gulf of Mexico has been a challenge due to the environmental disaster’s unprecedented scope. One unsolved puzzle is the location of 2 million barrels of submerged oil thought to be trapped in the deep ocean. UC Santa Barbara’s David Valentine and colleagues from the Woods Hole Oceanographic Institution (WHOI) and UC Irvine have been able to describe the path the oil followed to deposit in a 1,250-square-mile patch on the deep ocean floor. Their findings appear in the Proceedings of the National Academy of Sciences. For this study, the scientists used data from the Natural Resource Damage Assessment process conducted by the National Oceanic and Atmospheric Administration. The United States government estimates the Macondo well’s total discharge—from the spill in April 2010 until the well was capped that July—to be 5 million barrels. The findings suggest that the undersea deposits came from Macondo oil that was first suspended in the deep ocean and then rained down 1,000 feet to settle on the sea floor without ever reaching the ocean surface.

View UCSB Data Science Press Release

October 23, 2014 — Desert streams: Deceptively simple

Dryland channels exhibit very simple topography despite being shaped by volatile rainstorms. Credit: Katerina Michaelides

UCSB 10/23/2014—Rainstorms drive complex landscape changes in deserts, particularly in dryland channels, which are shaped by flash flooding. Paradoxically, such desert streams have surprisingly simple topography with smooth, straight and symmetrical form that until now has defied explanation. That paradox has been resolved in newly published research by two researchers at UC Santa Barbara’s Earth Research Institute, who modeled dryland channels using data collected from the semi-arid Rambla de Nogalte in southeastern Spain. The pair show that simple topography in dryland channels is maintained by complex interactions among rainstorms, the stream flows the storms generate in the river channel, and sediment grains present on the riverbed. They found that dryland channel width fluctuates downstream. Their observations show that grain size (roughness) also fluctuates from sand to gravel in a downstream direction. They also produced simulations of extreme flows to determine the volume of flow necessary to reshape the channel completely. Their findings appear today in the journal Geology.

View UCSB Data Science Press Release

October 23, 2014 — One giant step for ocean diversity

Scientists at UCSB’s Marine Science Institute use this quad pod camera array to capture underwater images of marine habitats. Credit: Spencer Bruttig

UCSB 10/23/2014—The marine Biodiversity Observation Network (BON) is centered on the Santa Barbara Channel, where various groups have been gathering a breadth of scientific data ranging from intertidal monitoring to physical oceanographic measurements. Existing diversity data streams will be augmented with new technology and compiled into a comprehensive dataset on species richness. Funded with $5 million by NASA, the Bureau of Ocean Energy Management (BOEM) and the National Oceanic and Atmospheric Administration (NOAA), and led by UC Santa Barbara, the prototype marine BON is designed to fill a gap not addressed by NASA’s own Group on Earth Observations (GEO) BON, which focuses on terrestrial biomes. The prototype marine BON seeks to eventually cover a huge range of biodiversity in the oceans. Ultimately, the body of knowledge it amasses will mesh with essential biodiversity variables (EBVs), the metrics adopted by NASA’s GEO BON to measure biodiversity across the Earth. In this way, the measurements generated through the marine BON may one day be part of a global assessment of biodiversity.

View UCSB Data Science Press Release

October 9, 2014 — Counting crows—and more

Male Scarlet Tanager (Piranga olivacea), a vibrant songster of eastern hardwood forests. These long-distance migrants move all the way to South America for the winter. Credit: Kelly Colgan Azar

UCSB 10/9/2014—As with the proverbial canary in a coal mine, birds are often a strong indicator of environmental health. Over the past 40 years, many species have experienced their own environmental crisis due to habitat loss and climate change. To fully understand bird distribution relative to environment requires extensive data beyond those amassed by a single institution. Enter DataONE: the Data Observation Network for Earth, a collaboration of distributed organizations with data centers and science networks, including the Knowledge Network for Biocomplexity (KNB) administered by UC Santa Barbara’s National Center for Ecological Analysis and Synthesis (NCEAS). Funded in 2009 as one of the initial NSF DataNet projects, DataONE has enhanced the efficiency of synthetic research—research that synthesizes data from many sources—enabling scientists, policymakers and others to more easily address complex questions about the environment. In its second phase, DataONE will target goals that enable scientific innovation and discovery while massively increasing the scope, interoperability and accessibility of data.

View UCSB Data Science Press Release

October 6, 2014 — The ocean’s future

This San Miguel Island rock wall is covered with a diverse community of marine life. Credit: Santa Barbara Coastal Long-Term Ecological Research Program

UCSB 10/6/2014—Is life in the oceans changing over the years? Are humans causing long-term declines in ocean biodiversity with climate change, fishing and other impacts? At present, scientists are unable to answer these questions because little data exist for many marine organisms, and the small amount of existing data focuses on small, scattered areas of the ocean. A group of researchers from UCSB, the United States Geological Survey (USGS), National Oceanic and Atmospheric Administration (NOAA) National Marine Fisheries Service, and UC San Diego’s Scripps Institution of Oceanography is creating a new prototype system—the Marine Biodiversity Observation Network—to solve this problem. The network will integrate existing data over large spatial scales using geostatistical models and will utilize new technology to improve knowledge of marine organisms. UCSB’s Center for Bio-Image Informatics will use advanced image analysis to automatically identify different species including fish. In addition to describing patterns of biodiversity, the project will use mathematical modeling to examine the value of information on biodiversity in making management decisions as well as the cost of collecting that information in different ways. The five-year $5 million project will center on the Santa Barbara Channel, but the long-term goal is to expand the network around the country and around the world to track over time the biodiversity of marine organisms, from microbes to whales.

View UCSB Data Science Press Release

September 29, 2014 — At the interface of math and science

Model of vesicle adhesion, rupture and island dynamics during the formation of a supported lipid bilayer (from work by Atzberger et al.) featured on the cover of the journal Soft Matter. Credit: Peter Allen

UCSB 9/29/2014—New mathematical approaches—developed by Paul Atzberger, UC Santa Barbara professor of mathematics and mechanical engineering, his graduate student Jon Karl Sigurdsson, and other coauthors—reveal insights into how proteins move around within lipid bilayer membranes. These microscopic structures can form a sheet that envelops the outside of a biological cell in much the same way that human skin serves as the body’s barrier to the outside environment. “It used to be just theory and experiment,” Atzberger said. “Now computation serves as an ever more important third branch of science. With simulations, one can take underlying assumptions into account in detail and explore their consequences in novel ways. Computation provides the ability to grapple with a level of detail and complexity that is often simply beyond the reach of pure theoretical methods.” Their work was published in Proceedings of the National Academy of Sciences (PNAS) and featured on the cover of the journal Soft Matter.

View UCSB Data Science Press Release

September 16, 2014 — The Exxon Valdez — 25 years later

Oil coated the rocky shoreline after the Exxon Valdez ran aground, leaking 10 to 11 million gallons of crude oil into the Gulf of Alaska on March 24, 1989. Credit: Alaska Resources Library & Information Services

UCSB 9/16/2014—UC Santa Barbara’s National Center for Ecological Analysis and Synthesis (NCEAS) has collaborated with investigators from Gulf Watch Alaska and the Herring Research and Monitoring Program to collate historical data from a quarter-century of monitoring studies on physical and biological systems altered by the 1989 Exxon Valdez oil spill. Now, two new NCEAS working groups will synthesize this and related data and conduct a holistic analysis to answer pressing questions about the interaction between the oil spill and larger drivers such as broad cycles in ocean currents and water temperatures. Both statistical and modeling approaches will be used to understand both mechanisms of change and the changes themselves, and to create an overview of past changes and potential futures for the entire area. The investigators will use time series modeling approaches to determine the forces driving variability over time in these diverse datasets. They will also examine the influences of multiple drivers, including climate forcing, species interactions and fishing. By evaluating species’ life history attributes, such as longevity and location, and linking them to how and when each species was impacted by the spill, the researchers may help predict ecosystem responses to other disasters and develop monitoring strategies to target vulnerable species before disasters occur.

View UCSB Data Science Press Release

September 5, 2014 — When good software goes bad

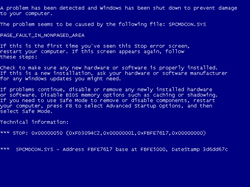

This so-called Blue Screen of Death is often the result of errors in software. Credit: Courtesy Image

UCSB 9/5/2014—With computing distributed across multiple machines on the cloud, errors and glitches are not easily detected before software is rolled out to the public. As a result, bugs manifest themselves after the programs have been downloaded, costing a software company time, money and even user confidence, and potentially leaving devices vulnerable to security breaches. With a grant of nearly $500,000 from the National Science Foundation, UC Santa Barbara computer scientist Tevfik Bultan and his team are studying verification techniques that can catch and repair bugs in code that manipulates and updates data in web-based software applications. Using techniques that translate software data into code that can be evaluated with mathematical logic, Bultan’s team can verify the soundness of any particular software. By automating the process and adding steps to update the software as needed, the team aims to reduce the time and money lost to crashes, perpetuated errors, vulnerabilities and other glitches.

View UCSB Data Science Press Release

August 4, 2014 — The best of both worlds

Stefano Tessaro. Credit: Sonia Fernandez

UCSB 8/04/2014—Security standardization is a double-edged sword. An encryption algorithm that gets recognized by an authority such as the National Institute of Standards and Technology (NIST) will be put into wide use, even embedded into chips that are built into computers. That’s great for efficiency and reliability—but if there’s a successful attack, the vast majority of the world’s electronic communications are suddenly vulnerable to decryption and hacking. Moreover, the cost of security is speed: the most secure cryptographic algorithms are not the fastest. Funded by a $500,000 grant from the National Science Foundation’s Secure and Trustworthy Cyberspace program, UC Santa Barbara cryptologist Stefano Tessaro and his team will study what it would take to devise algorithms that researchers know to be secure while maintaining the level of service (i.e., speed) internet users have come to expect.

View UCSB Data Science Press Release

June 18, 2014 — A cure for Alzheimer’s requires a parallel team effort

Kenneth S. Kosik. Credit: Spencer Bruttig

UCSB 6/18/14—For the more than 5 million Americans and 35 million people worldwide suffering from Alzheimer’s disease, the rate of progress in developing effective therapeutics has been unacceptably slow. To address this urgent need, UC Santa Barbara’s Kenneth S. Kosik and colleagues are calling for a parallel drug development effort in which multiple mechanisms for treating Alzheimer’s are investigated simultaneously rather than the current one-at-a-time approach. In an article published today in Science Translational Medicine, the research team—Kosik, Andrew W. Lo and Jayna Cummings of the MIT Sloan School of Management’s Laboratory for Financial Engineering, and Carole Ho of Genentech, Inc.—presents a simulation of a hypothetical megafund devoted to bringing Alzheimer’s disease therapeutics to fruition. To quantify potential cost savings, the authors used projections developed by the Alzheimer’s Association and found that savings could range from $813 billion to $1.5 trillion over a 30-year period, more than offsetting the cost of a $38 billion megafund. Given that Alzheimer’s disease has the potential to bankrupt medical systems—the Alzheimer’s Association projects that the costs of care could soar to $1 trillion in the U.S. by 2050—governments around the world have a strong incentive to invest more heavily in the development of Alzheimer’s disease therapeutics and catalyze greater private-sector participation.
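As a rough illustration of the "more than offsetting" claim, the short Python sketch below compares the projected savings range with the megafund cost quoted above. It uses only the three dollar figures from this summary; the variable and function names are illustrative, and the calculation is a naive undiscounted comparison, not the simulation method used by the authors.

```python
# Figures quoted in the summary above, in billions of US dollars.
MEGAFUND_COST_BN = 38
SAVINGS_LOW_BN = 813
SAVINGS_HIGH_BN = 1500  # $1.5 trillion


def savings_to_cost_ratio(savings_bn: float, cost_bn: float) -> float:
    """Return projected savings per dollar of megafund cost (no discounting)."""
    return savings_bn / cost_bn


# Naive comparison: ignores timing of cash flows, risk, and discount rates.
low_ratio = savings_to_cost_ratio(SAVINGS_LOW_BN, MEGAFUND_COST_BN)
high_ratio = savings_to_cost_ratio(SAVINGS_HIGH_BN, MEGAFUND_COST_BN)
low_net = SAVINGS_LOW_BN - MEGAFUND_COST_BN
high_net = SAVINGS_HIGH_BN - MEGAFUND_COST_BN

print(f"Savings-to-cost ratio: {low_ratio:.1f}x to {high_ratio:.1f}x")
print(f"Net savings: ${low_net} billion to ${high_net} billion")
```

Under these simplifying assumptions, the projected savings are roughly 21 to 39 times the cost of the fund.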

View UCSB Data Science Press Release

May 14, 2014 — The state of rain

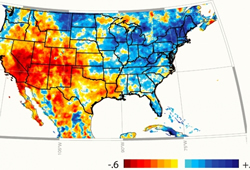

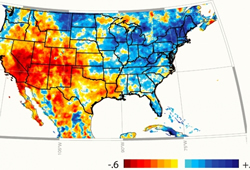

Spring–summer rainfall trends for the U.S.

UCSB 5/14/14—A new dataset developed in partnership between UC Santa Barbara and the U.S. Geological Survey (USGS) can be used for environmental monitoring and drought early warning. The Climate Hazards Group Infrared Precipitation with Stations (CHIRPS), a collaboration between UCSB’s Climate Hazards Group and USGS’s Earth Resources Observation and Science (EROS) Center, couples rainfall data observed from space with more than three decades of rainfall data collected at ground stations worldwide. The new dataset allows experts who specialize in the early warning of drought and famine to monitor rainfall in near real-time, at a high resolution, over most of the globe. CHIRPS data can be incorporated into climate models, along with other meteorological and environmental data, to project future agricultural and vegetation conditions.

View UCSB Data Science Press Release

April 29, 2014 — Future effects

UCSB’s Theodore Kim gives his acceptance speech at the Academy of Motion Picture Arts and Sciences’ Scientific and Technical Achievement Awards. (Credit: Greg Harbaugh / ©A.M.P.A.S.)

UCSB 4/29/14 — What will movies look like 100 years from now? Has the prevailing effects technology known as CGI (computer-generated imagery) already peaked, or is it just getting started? And is it making high-end effects more accessible or isolating lesser-budgeted potential graphics auteurs? These are among the questions that Theodore Kim asks in the Plous Lecture at UC Santa Barbara. In 2013, Kim won an Academy Award in Technical Achievement in recognition of software that he (with three others) developed as a post-doc and that has become an industry-standard technique for smoke and fire effects. His Plous Lecture also revisits a point he made in his Oscar acceptance speech: that academia is the frequent, yet under-credited, birthplace of innovations that are enabling big changes in filmmaking.

View UCSB Data Science Press Release

April 23, 2014 — Superconducting qubit array points the way to quantum computers

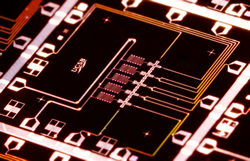

The five cross-shaped devices are the Xmon variant of the transmon qubit placed in a linear array. (Credit: Erik Lucero)

UCSB 4/23/14 — A fully functional quantum computer is one of the holy grails of physics. Unlike conventional computers, the quantum version uses qubits (quantum bits), which make direct use of the multiple states of quantum phenomena. When realized, a quantum computer will be millions of times more powerful at certain computations than today’s supercomputers. A group of UC Santa Barbara physicists has moved one step closer to making a quantum computer a reality by demonstrating a new level of reliability in a five-qubit array. Their findings appeared in the journal Nature.

View UCSB Data Science Press Release

April 1, 2014 — The Meeting Point

MORPHLOW: created by a student in UCSB’s Media Arts and Technology Program using scientific, computational algorithms for biological rules translated into a physical object via 3D printer. (Credit: R.J. Duran)

UCSB 4/1/14 — Scholars have frequently suggested that art and science find their meeting point in method. That idea could be the tagline for the Interrogating Methodologies symposium taking place at UC Santa Barbara on April 18 and 19. The symposium explores boundaries in art and science and seeks to initiate conversation among specialists from the sciences, social sciences, humanities and arts, all of whom grapple with questions about how those communities intersect.

View UCSB Data Science Press Release

March 26, 2014 — Here today, gone to meta

UCSB Library eyes digital curation service to help preserve research data created across campus.

UCSB 3/26/14 — With technology advancing at warp speed and data proliferating apace, can the scientific and scholarly output survive into the future? Enter Data Curation @ UCSB, an effort to address that very problem on campus. “There is now an expectation that if you are gathering data, it will be available right now, it will always be available, and it will be available in a digital form where I can use it,” said Greg Janée, a digital library research specialist at ERI and Data Curation @ UCSB project lead. “Keeping bits alive is a much higher-tech problem than keeping books alive,” added James Frew, professor of environmental informatics at UCSB’s Bren School of Environmental Science & Management and Janée’s research partner on the project. “Culture guarantees we’ll be able to read English in 300 years, but nothing guarantees we’ll be able to read a CD-ROM in even 10 years.” Now in its second year, the pilot project is using insights from a launch-year faculty survey to shape the inaugural iteration of a data curation service to be based at the UCSB Library.

View UCSB Data Science Press Release

March 25, 2014 — Could closing the high seas to fishing save migratory fish?

Global map of exclusive economic zones (green) and high seas (blue) oceanic areas (Credit: Courtesy photo)

UCSB 3/25/14 — Wild fish are in peril worldwide, particularly in international waters. Operating as a massive unregulated global commons where any nation can take as much as it wants, the high seas are experiencing a latter-day “tragedy of the commons,” with the race for fish depleting stocks of tuna, billfish and other high-value migratory species. A new paper by Christopher Costello, a professor of resource economics at UC Santa Barbara’s Bren School of Environmental Science & Management, and a coauthor, suggests a bold approach to reversing this decline: close the high seas to fishing. The researchers developed a computer simulation model of global ocean fisheries and used it to examine a number of management scenarios, including a complete closure of fishing on the high seas. The model tracked the migration and reproduction of fish stocks in different areas, and quantified the fishing pressure or activity, catch, and profits by each fishing nation under various policies. They found that closing the high seas could more than double both populations of key species and fisheries profit levels, while increasing fisheries yields by more than 30%.

View UCSB Data Science Press Release

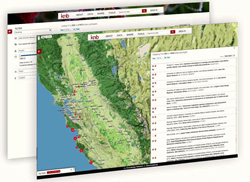

March 12, 2014 — NCEAS upgrades widely used scientific data repository

The upgraded KNB Data Repository features a new user interface and improved search function

UCSB 3/12/14 — With small labs, field stations and individual researchers collectively producing the majority of scientific data, the task of storing, sharing and finding the millions of smaller datasets requires a widely available, flexible and robust long-term data management solution. This is especially true now that the National Science Foundation (NSF) and a growing number of scientific journals require authors to openly store and share their research data. In response, UC Santa Barbara’s National Center for Ecological Analysis and Synthesis (NCEAS) has released a major upgrade to the KNB Data Repository (formerly the Knowledge Network for Biocomplexity). The upgrade improves access to and better supports the data management needs of ecological, environmental and earth science labs and individual researchers.

View UCSB Data Science Press Release